Table of contents

- Intro

- What is online malicious behaviour?

- How does the IT world deal with the problem? Classification.

- Internet disinformation - the most common problems

- Fake News

- Fake Reviews

- Hoaxes

- Malicious users of the web

- Vandals

- Trolls

- Sockpuppets

- Fake Influencers and Followers

- Conclusion

- Authors

- About SentiOne

Chapter 1 Intro

Malicious behaviour is a very broad phrase. It covers all activities that are somehow harmful to society or considered anti-social. In real life, there are special forces, supported by psychological research, that take action in the ongoing battle with such acts. On the internet, however, there are too many users, too few boundaries, too much innovation, and too short a history.

In this whitepaper, you will read how to recognise and deal with:

• fake news,

• fake reviews,

• hoaxes,

• vandals,

• trolls,

• sockpuppets,

• fake influencers.

These issues have become a global problem because their consequences can be destructive not only on a personal level but also politically and commercially. We should all know how to protect ourselves and our companies from the dangers lurking on the web. There’s more to internet safety than privacy issues and school awareness programmes. Luckily, computer scientists have dived into this vast research material. Their studies have become the basis for developing tools and AI solutions that detect anti-social activities, which you will also read about further on.

Chapter 2 What is online malicious behaviour?

Every now and then social media walls shake because of incredible news. Almost unbelievable. That you can lose half of your weight in two weeks with just this one weird technique. Or that the CEO of a big company reveals that you just have to do one thing to become a millionaire. Or that yet another celebrity died. People click and share this kind of information because it toys with our emotions. Sometimes we fall for it, sometimes we think it’s ridiculous right away. Either way, nobody likes to be fooled and yet, we experience it more than enough.

Why does it happen at all? The ant-shaker issue is probably as old as humanity. For many reasons, but mostly because of unspoken problems with building healthy relationships or a lack of attention from loved ones, people cause chaos. They might, for instance, shake an ant farm just to watch the little insects run frantically for their lives. It is said that this is how people subconsciously scream for attention and empathy. Without a doubt, the problem is not limited to the web. However, anonymity and interactivity may increase anti-social behaviour online, or at least increase the probability of being faced with it.

But there’s a difference between misinformation and disinformation. In a nutshell, the first happens when someone generates or duplicates false information without knowing that it’s not true. The second happens when someone does it deliberately. And that is the key to understanding malicious behaviour in general, not only online. Obviously, it is a very complex issue with a lot of symptoms, but put briefly, it’s acting intentionally in a way that is not accepted by society. It’s a bit like with jokes: there’s a thin line between a good joke and a bad one.

The most reproduced cartoon from “The New Yorker”, by Peter Steiner, originally printed 5 July 1993.

The web 2.0 era is on the wane, and we can already see the first signs of an artificially intelligent web 3.0, but the full transition will probably take a while, given the history of the previous change. The first blog that allowed readers to comment on posts went online in 1997, but the term “web 2.0” was only popularised by O’Reilly Media in 2004. If we agree that the new era started somewhere between these dates, we can say that it has been about twenty years since the internet started developing from a read-only library into the interactive web of unlimited connections that we live in today. That means it is becoming natural for the audience not just to absorb the news but also to react. Some people don’t even remember the times before. The ability to join discussions on forums, blogs, and portals has become a necessity.

Then again, there’s another side to the situation. A lot of businesses have suffered from either trolls or fake reviews. Some have spent money supporting fake influencers whose supposedly huge reach turned out to be a sham. It’s not easy to contain a social media crisis at the speed news spreads today. On the internet, there’s hardly any presumption of innocence. The consequences of someone’s scam may hit your brand image hard, even if you are completely innocent.

According to a survey by the Pew Research Center, 73% of adult internet users have witnessed harassment online. This number shows that finding tools to deal with online aggression is a much needed, though complex, task. The fact that we have more and more automated solutions is probably one of the most significant signs of a new fundamental change in internet history, and one of the biggest perks of living on the verge of web 3.0.

Chapter 3 How does the IT world deal with the problem? Classification.

There are a lot of malicious behaviours on the web. Not that long ago, the internet population suffered greatly from viruses and hacks, which of course still happen, but technology has advanced far enough to prevent most of them, at least those that are done just for fun and not by professionals. It could be said that the spam problem is solved for the most part, thanks to big data analysis and antispam algorithms that derive patterns from the data we have. Nevertheless, there is still a lot to work on, and the market is very dynamic, as the creativity of online users is unlimited, even when it comes to misbehaviour.

Of course, there’s a lot more to the subject, but from this text you will learn how to identify what are probably the most common issues related to false information that circulates around the web, causing trouble in many different ways. They can be approached with technology based on natural language processing, one of the most innovative fields of computer science these days. Not that long ago, a lot of language-oriented research was founded on rule-based systems or taggers created by people. This works well with written text or with languages that have highly specified syntactic structures, but in the case of speech or languages with more complex grammar, machine learning and neural networks come in handy.

There is a lot of research around the world that aims to deal with malware and other online nuisances. Even though technology based on artificial intelligence is developing fast and is making a huge difference, we’re still faced with many different kinds of “bad jokes” on the web. Besides, it’s not like we all have AI at our disposal to spot fake news, trolls or the internet fluff we’re not even aware of. So first things first: we should define the issues. Knowing what we’re dealing with when we talk about malicious behaviour is the first step to shielding yourself.

The experts have designated a few basic aspects and categorized them into two main groups:

1. Disinformation:

• Fake News

• Fake Reviews

• Hoaxes

2. Malicious users:

• Vandals

• Trolls

• Sockpuppets

• Fake Influencers and Followers

Chapter 4 Internet disinformation - the most common problems

Have you ever played the game where you have to tell the lie from three stories? One of the stories the narrator tells is supposed to be fake, and the two others real but unbelievable enough to compete with the fabricated one. The narrator tells all three and the listeners are supposed to spot the fake. Success in figuring out the truth depends on the narrator and on the stories themselves, obviously, but mostly on the face-to-face contact. It is hard enough, even though people can spot liars based on micro-expressions. Pointing out what’s fake in written text is that much harder.

More and more people (in 2012 it was about 70%) rely solely on their social feed for their morning news. That’s probably the most significant reason we become victims of anti-social behaviour. Even qualified journalists and media professionals sometimes pass on news that hasn’t been verified, probably because of the pace of life and information overload.

That is why we’re experiencing two opposing tendencies on the web nowadays. One group of internet users takes the information they find on the web as undeniable truth and shares it without even considering double-checking, while the other group, discouraged by the spread of false information, keeps its distance, which leads to a huge decrease in trust levels in society.

Chapter 5 Fake News

Sharing online articles that don’t have much to do with the truth is a burning problem these days, and social media have fuelled it. Although it’s a hot topic now and may seem like a modern issue, it started offline centuries ago.

We have a long history of spreading rumors designed for political or religious reasons.

By the end of the 19th century, illiteracy around the eastern civilisation had been reduced significantly, which surely was a big step for humankind. However, the situation had some side effects. It led to the start of media democratisation. More and more people could read, so newsmen started to match the content to the lowering expectations of the public. Quantity began to win over quality. These were the early stages of tabloid journalism, also called yellow journalism because of the colour of the ink with which the first newspapers of this type were printed. Joseph Pulitzer’s New York World and William Randolph Hearst’s New York Journal were the first ones to wage a business war. To draw more readers, and so sell more copies, they sensationalised information. The newsmen would use controversial headlines written in large, bold letters, supported by big illustrations. But most of all, they made history by spreading information based on preposterous logic, pseudoscience, and shady anonymous sources. They would, however, always root for the little fellow to win the sympathy of the poor and unfortunate masses, a wide target group. Therefore, the history of fake news has much to do with the evolution of mass media.

Source: http://www.brownstonedetectives.com/wp-content/uploads/2014/09/seq-1.jpg

The online equivalents of these eye-catching newspaper headlines are called clickbait. Research shows that people hardly ever read the entire article. However, the headline is supposed to tempt readers to at least click and be exposed to the ads, since the portal owner usually gets paid for pageviews. The more sensational or unbelievable the headline, the better the chance of getting more clicks. It works SEO-wise as well. According to linguistic studies, fake news headlines are much longer and contain more emotive vocabulary than those of verified news. Such research also led to the development of automated systems that spot fake news with 86% accuracy (compared to 66% for people).
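The two headline traits mentioned above, length and emotive vocabulary, can be turned into simple numeric features. The snippet below is only a minimal sketch in Python, not the system used in the cited research; the word list `EMOTIVE_WORDS` and the function name `headline_features` are assumptions made up for illustration.

```python
import re

# Hypothetical, deliberately tiny lexicon of emotive words (an assumption for illustration).
EMOTIVE_WORDS = {"shocking", "unbelievable", "incredible", "weird", "secret", "miracle"}


def headline_features(headline: str) -> dict:
    """Return simple surface features of a headline."""
    # Lower-case the headline and keep only runs of letters and apostrophes.
    words = re.findall(r"[a-z']+", headline.lower())
    emotive = sum(1 for w in words if w in EMOTIVE_WORDS)
    return {
        "word_count": len(words),
        "emotive_count": emotive,
        # Share of emotive words; 0.0 for an empty headline.
        "emotive_ratio": emotive / len(words) if words else 0.0,
    }
```

A real detector would feed features like these, together with many others, into a trained classifier; on their own they only hint that a headline deserves a second look.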

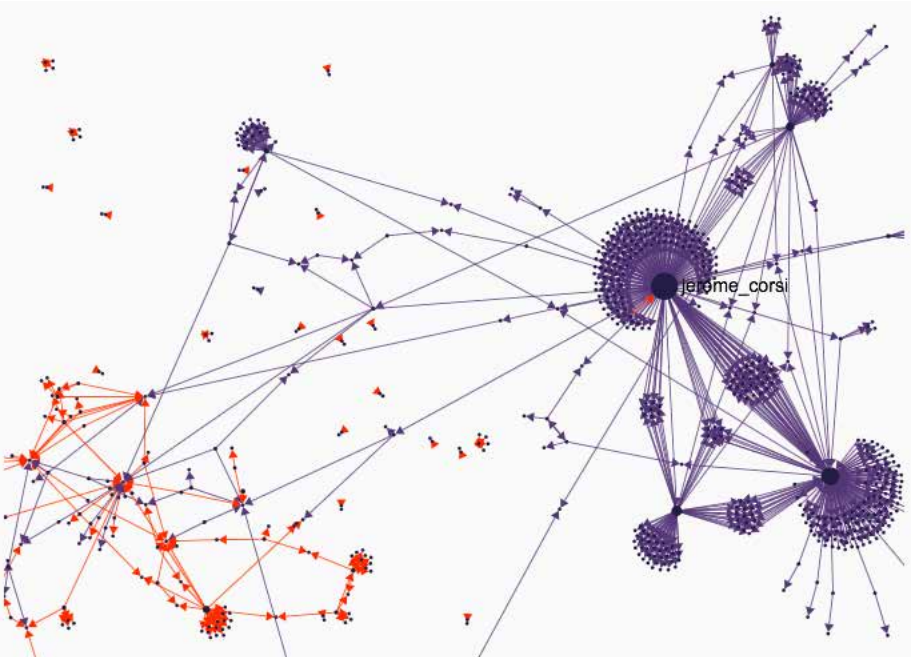

If you don’t have AI technology at your disposal, there are recommended portals like Snopes or FactCheck.org that verify dubious content. You can also try Hoaxy, a platform that visualises the spread of claims versus verified news. Here’s a preview of how information about Barack Obama is being shared online.

Apparently, true news (orange) spreads in a more organic pattern, while clear epicentres can be seen for fake articles (violet).

Experts have noted more distinctive traits that help us spot fake news. After the issue arose, guides were created.

Facebook released Tips to Spot False News (“false” rather than “fake” because of the political connotations of the latter), which was followed by a fierce discussion, as social media are considered one of the main causes of the spread of fake news. One of the most popular online infographics on the subject was made by the International Federation of Library Associations and Institutions and is free to download in over 30 languages.

While double-checking articles, even according to the rules above, you must never lose focus. There are a lot of sources that mimic reliable portals, so make sure you don’t fall for the trick, as they may bear a striking resemblance to the original ones.

Like these two:

It is also recommended to take a look at the domain. In the example pictured above, there’s the credible news provider abc.com versus the fabricated abc.com.co. The difference between these domains is, as can be seen, subtle. To make sure you aren’t drawn into misinformation, you can check URLs on the Real or Satire platform.
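The domain check described above can be partly automated. The following is a rough sketch, assuming you keep your own list of trusted domains; `TRUSTED_DOMAINS` and `classify_host` are hypothetical names, and the rule only catches lookalikes that embed a trusted name followed by extra labels, as in the abc.com.co example.

```python
from urllib.parse import urlparse

# Hypothetical allow-list of domains the reader trusts (an assumption for illustration).
TRUSTED_DOMAINS = {"abc.com"}


def classify_host(url: str) -> str:
    """Return 'trusted', 'lookalike' or 'unknown' for the URL's host name.

    The URL must include a scheme (http:// or https://); otherwise the host
    cannot be parsed and the result is 'unknown'.
    """
    host = (urlparse(url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    for trusted in TRUSTED_DOMAINS:
        # Exact match or a genuine subdomain such as news.abc.com.
        if host == trusted or host.endswith("." + trusted):
            return "trusted"
    for trusted in TRUSTED_DOMAINS:
        # The trusted name is embedded but followed by extra labels, e.g. abc.com.co.
        if host.startswith(trusted + ".") or ("." + trusted + ".") in host:
            return "lookalike"
    return "unknown"
```

A result of 'unknown' says nothing about credibility; it only means the host is neither on the list nor an obvious imitation of it.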

We ran some tests on the tools that are supposed to help in spotting fake news. Although they cover most malicious domains and articles, they are unfortunately not foolproof. Make sure you check the claim from different angles. If still in doubt, you may try discussing it with somebody to get more perspective and some new points on the subject.

However, note that some news may be subject to the echo chamber effect, which means that it is acclaimed by the supporters of a theory and suppressed in other circles. If you want something to be true, it’s not hard to find a source favourable to your thesis, so don’t fall for confirmation bias, even though it’s not easy to think outside the box.

Key takeaways

More and more people (including journalists) spread non-confirmed news, especially on social media.

Remember to double-check before you share; use AI-based tools as they are more precise than humans.

Make sure you use at least a few of the tips above and beware of confirmation bias.

Chapter 6 Fake Reviews

Online ratings are becoming the main source of information for most internet users. Whenever we wish to get an unbiased opinion on a restaurant, hotel, film or book, we go and look at the stars it got on credible portals designed to collect the voice of the customer. From time to time, we actually read a few of the top reviews, but mostly we look at the average score. The web, which contains unlimited space for the opinions of people from all around the world, is supposed to be the most impartial place to look for advice. The bigger the statistical sample, the more reliable the source should be, for the crowd can’t lie. That’s probably why, according to the Nielsen Global Trust in Advertising Survey, in 2015 66% of respondents from all around the world claimed to trust consumer opinions posted online. However, some people have found a way to meddle with online reviews.

Fake reviews might either promote or discredit a rated product, depending on the intent. The person who orders fake reviews might want either to increase their own conversion rates (which is probably the more common situation) or to decrease their competitors’. It is easier than it should be, although quite costly. And although we’ve been dealing with fake reviews for almost ten years, they remain a burning issue despite some action taken against them. The problem hits the biggest players at the largest scale: platforms like Google Play, Amazon, or Yelp suffer the most. Luckily for software engineers, this provides a huge base of data to train neural networks and develop automated detection systems.

Psychological and linguistic analysis shows that there are a few distinctive traits of fake reviews:

1. They happen to be more opinionated and shorter than the real ones;

2. Their rating pattern tends to be more bimodal;

3. Fake reviewers collude with each other;

4. They are more distinguishable by their behavioural patterns than by their linguistic ones.
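The second trait, a bimodal rating pattern, means that ratings cluster at both ends of the scale. One crude way to measure this is the share of 1-star and 5-star ratings. The sketch below assumes a 1 to 5 star scale; the function names and the 0.8 threshold are illustrative assumptions, not values taken from the research.

```python
from collections import Counter


def extreme_rating_share(ratings: list[int]) -> float:
    """Share of ratings that are 1 or 5 stars on a 1-5 scale."""
    if not ratings:
        return 0.0
    counts = Counter(ratings)
    return (counts[1] + counts[5]) / len(ratings)


def looks_bimodal(ratings: list[int], threshold: float = 0.8) -> bool:
    """Flag a rating set where both extremes are present and dominate.

    The 0.8 threshold is an arbitrary assumption, not a value from the research.
    """
    counts = Counter(ratings)
    return counts[1] > 0 and counts[5] > 0 and extreme_rating_share(ratings) >= threshold
```

A flag from such a check is only a hint, since genuinely polarising products can also collect mostly extreme ratings.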

It is said that for ordinary people it’s almost impossible to tell real reviews from well-written fake ones, as the authors tend to change their style to match current needs. The two most popular online tools for spotting fake reviews, mostly on Amazon, are Fakespot and ReviewMeta. The platforms’ creators, however, reserve the right to be wrong. Unexpected things happen. Some films or books may get an unusual number of opinions because of an event or a public recommendation by a celebrity, which would confuse the automated system.

Key takeaway

Take everything with a grain of salt. Remember that you may disagree with even the most honest opinion about a product you just bought, let alone a whole bunch of fraudulent ones.

Chapter 7 Hoaxes

When, on 30 October 1938, the CBS radio network aired an unusual play based on the novel The War of the Worlds, nobody expected it would become so famous. The play interrupted a music programme and was aired as a series of news broadcasts, which supposedly ended in panic on a mass scale. Regardless of the actual size of the panic, this is surely the most famous media hoax so far.

In the context of online malicious behaviour, a hoax is considered a type of fake news. Its basic distinctive feature seems to be that it’s mostly created for satirical purposes. It plays the role of an online practical joke. The term is related to the semantic field of witchcraft, as it’s supposed to be a shortened variation of the magic incantation hocus pocus. Nevertheless, it still counts as disinformation since it is created deliberately and is supposed to simulate the truth. According to Stanford University research, hoaxes are usually simple and repetitive. They may have a notable impact on the web, as some of them last quite long and therefore receive significant traffic; however, they contain fewer links, or invalid ones. On that basis, an AI algorithm was developed that can detect hoaxes with pretty impressive precision.

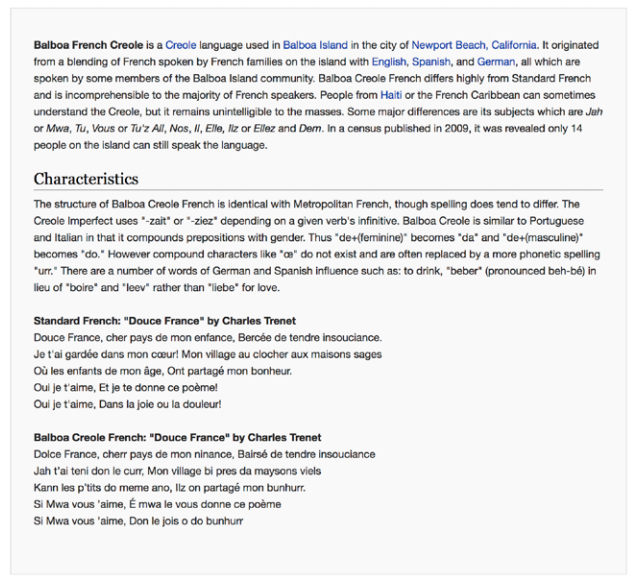

Wikipedia analysts have therefore created a list of hoaxes to keep as a resource for machine learning systems. Curiously, a lot of the listed articles stayed online for years (up to twelve years!), even though they were completely fictitious. One example is the article about the Balboa French Creole language, which sat online for two years.

Key takeaway

Make sure you double-check the information you share, even if it doesn’t seem odd at first sight.

Chapter 8 Malicious users of the web

As mentioned before, anti-social behaviour is probably as old as the world itself. Wherever people go, some sort of ill will follows. Disinformation doesn’t spread on its own, after all. Regardless of the intent, whether it’s supposed to be a simple prank or to gain a political advantage, online malicious behaviour happens and there are people behind each act. There are specific sorts of internet users named after the type of online malice they practise.

Chapter 9 Vandals

Just like in real life, internet users sometimes behave anti-socially just for the sake of destruction. Online vandalism mostly affects portals that are based on free collective work. Whenever a portal or website is open-source, it is exposed to people contributing in a negative way too. This may involve deleting parts of the content, adding non-constructive parts or editing in a damaging way.

The reasons behind such behaviour vary. It is believed that for the most part it’s supposed to be a joke, although performed by a not too subtle person. Sometimes vandals are self-appointed vigilantes who make an edit to prove a certain point. However, from the perspective of the rest of society, this input is non-constructive, so it goes against the policy and idea of open-source collaboration.

Internet vandalism is, unsurprisingly, common on Wikipedia. It is free to edit by all users of the web, so it happens quite often that articles are damaged. About 7% of edits on Wikipedia are considered acts of internet vandalism. This leaves Wikipedia moderators with a big dilemma: whether they should restrict the freedom to contribute.

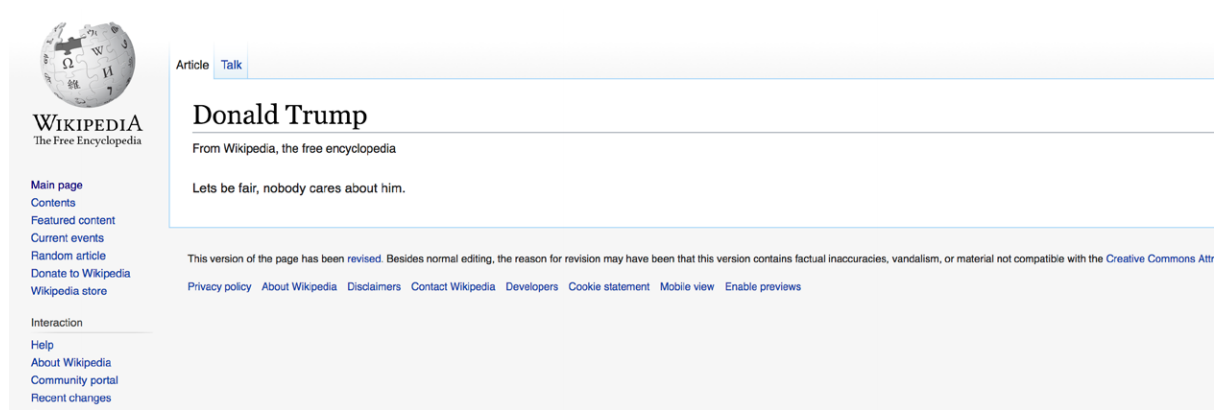

Of course, vandals tend to step up their activities according to trends. The 2016 election in the USA came along with a whole list of malicious internet behaviour, including spiteful edits of the main opponents’ pages. Pornographic pictures and a message from a trolling organisation were added to Hillary and Bill Clinton’s pages. Also, Donald Trump’s entire Wikipedia entry was deleted and replaced with just one sentence.

Software engineers are making an effort to develop automated technologies for detecting vandals and their activities on the web. There are bots that scan article edits, automatically detect harmful content, and warn users who are potential vandals, as part of Wikipedia’s four-step warning system.

ClueBot NG, created in 2010 by Wikipedia users Christopher Breneman and Cobi Carter to detect users who edit articles badly, is one of the most productive bots. It combines machine learning with statistical techniques based on Bayesian theory. Like many early AI inventions, this bot has also met with some criticism. It’s said to be imperfect, as it sometimes overestimates the value of a Wikipedia edit and requires the article’s content to be too impersonal for human standards.

By Wikimedia Foundation, CC BY-SA 3.0, https://en.wikipedia.org/w/index.php?curid=41391488
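To give a feel for what a statistical technique based on Bayesian theory can look like, here is a toy naive Bayes classifier over the words of an edit. It is a generic textbook sketch and not ClueBot NG’s actual implementation; the class name, labels and training sentences are all made up for illustration, and at least one training example per label is required before scoring.

```python
import math
from collections import Counter


class NaiveBayesEditClassifier:
    """Tiny multinomial naive Bayes over the words added by an edit."""

    def __init__(self) -> None:
        self.word_counts = {"vandalism": Counter(), "constructive": Counter()}
        self.doc_counts = Counter()

    def train(self, text: str, label: str) -> None:
        self.word_counts[label].update(text.lower().split())
        self.doc_counts[label] += 1

    def log_score(self, text: str, label: str) -> float:
        vocab = set(self.word_counts["vandalism"]) | set(self.word_counts["constructive"])
        total_words = sum(self.word_counts[label].values())
        # Log prior from the share of training edits with this label.
        score = math.log(self.doc_counts[label] / sum(self.doc_counts.values()))
        for word in text.lower().split():
            # Laplace (add-one) smoothing so unseen words do not zero out the product.
            count = self.word_counts[label][word] + 1
            score += math.log(count / (total_words + len(vocab)))
        return score

    def predict(self, text: str) -> str:
        # Pick the label with the higher log score.
        return max(self.word_counts, key=lambda label: self.log_score(text, label))
```

A possible usage, with invented training data:

```python
clf = NaiveBayesEditClassifier()
clf.train("stupid stupid lol page blanked", "vandalism")
clf.train("added citation for population figure", "constructive")
label = clf.predict("lol stupid")
```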

Key takeaway

The best-performing solution for detecting internet vandals is a combination of metadata, text processing and human feedback, so report a non-constructive edit whenever you spot one.

Chapter 10 Trolls

This type of malicious internet user is probably the most widespread and well known. Obviously, there are a lot of different kinds of trolls, and some of them might not even realise that what they do is called trolling and is truly annoying to others. They are the people who stir up online discussions just for the sake of it. Their common name comes from Scandinavian mythology, where the troll is a mean and vicious but not too clever woodland creature. There’s a whole spectrum of online trolling, from people who simply can’t go without a spiteful comment to truly sociopathic ones who would offend and verbally assault anyone. It is said that internet trolls share some socially unacceptable traits of a certain personality type.

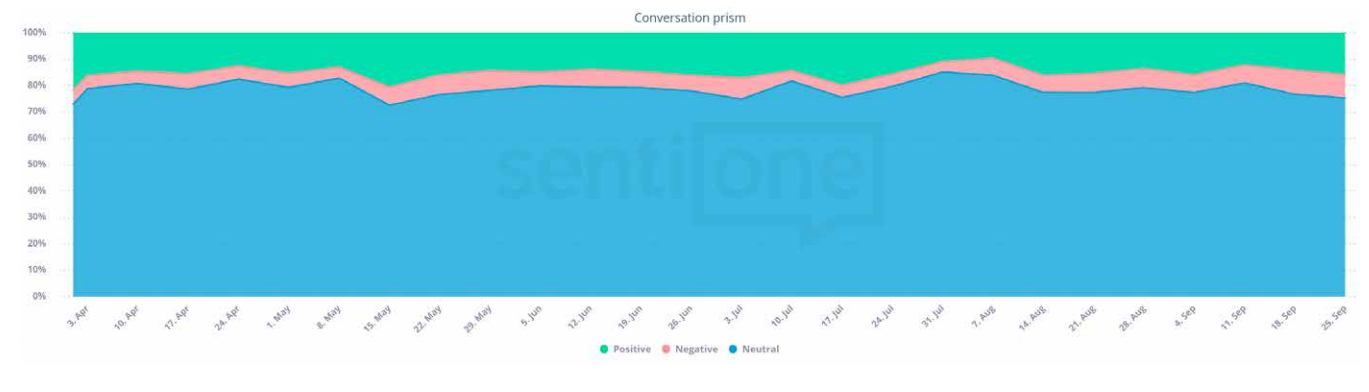

This issue may seem insignificant, as we can always simply ignore irrelevant comments that are clearly written to mess with us, but in fact it’s quite a problem for moderators of websites, forums or portals. For example, CNN is forced to delete about 21% of comments because they are offensive or irrelevant. At this scale, big brands looking after their online reputation might find it difficult to identify trolls. The solution to that problem might come with SaaS tools that provide text analysis estimating the sentiment of brand mentions. For example, SentiOne provides one of the best sentiment analysis tools, covering up to 27 languages.

You get charts with the estimated sentiment and an alert in case of a sudden increase in negative mentions. You can click through from the chart directly to the mention analysis and see for yourself whether it’s a crisis or a troll attack. If you suspect trolls, you can ignore all mentions written by a particular author and compare the results.
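One simple way to raise an alert on a sudden increase in negative mentions is to compare the latest daily count with the recent average. The snippet below is a hypothetical sketch of that idea, not a description of how SentiOne or any other tool implements its alerts; the function name, the seven-day window and the multiplier `k` are assumptions.

```python
from statistics import mean, stdev


def negative_spike(daily_negative_counts: list[int], window: int = 7, k: float = 3.0) -> bool:
    """Return True if the latest day exceeds mean + k * stdev of the preceding window.

    `window` must be at least 2, because a standard deviation needs two data points.
    """
    if len(daily_negative_counts) < window + 1:
        return False  # not enough history to judge
    history = daily_negative_counts[-(window + 1):-1]
    latest = daily_negative_counts[-1]
    threshold = mean(history) + k * stdev(history)
    return latest > threshold
```

Running the same check on counts with a suspected author’s mentions filtered out shows whether a single account is driving the spike.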

Research based on internet comments shows some similarities among the ones written by trolls.

Compared to non-trolling comments, they contain:

- 3x more swear words

- 47% more content in general

- 9% less content similar to previous posts by that particular author

- 8% fewer positive words

- 2x as many replies

So basically, trolls provoke other internet users to get involved in discussions, and they do it effectively, even if not in the most sophisticated way. Their posts are usually loosely (if at all) related to the main topic, aggressive, and suspiciously wordy. And yet people get triggered and reply, statistically, twice as often as in non-violent conversations. This may lead to a lot of anger and frustration.

The only sound solution is not to get involved in a discussion that is doomed from the start once you see that someone is trying to get on your nerves.
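The traits listed above (swear words, length, positive words, similarity to the author’s earlier posts) can be measured per comment. The following is a minimal sketch; the word lists are tiny placeholders, Jaccard overlap is just one possible similarity measure, and none of it is taken from the cited research.

```python
import re

# Hypothetical miniature word lists (assumptions for illustration only).
SWEAR_WORDS = {"damn", "idiot", "stupid"}
POSITIVE_WORDS = {"good", "great", "thanks", "nice", "love"}


def tokenize(text: str) -> list[str]:
    """Lower-case the text and split it into words."""
    return re.findall(r"[a-z']+", text.lower())


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two word sets, from 0.0 (disjoint) to 1.0 (identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def comment_features(comment: str, previous_posts: list[str]) -> dict:
    """Measure one comment against the author's previous posts."""
    words = tokenize(comment)
    previous_words: set[str] = set()
    for post in previous_posts:
        previous_words.update(tokenize(post))
    return {
        "swear_count": sum(w in SWEAR_WORDS for w in words),
        "positive_count": sum(w in POSITIVE_WORDS for w in words),
        "word_count": len(words),
        "similarity_to_previous": jaccard(set(words), previous_words),
    }
```

Features like these only become meaningful when compared against typical values for non-trolling comments on the same site.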

Key takeaway

If you want to avoid wasting your time on trolls, do not feed them!

Chapter 11 Sockpuppets

This is what the online world calls the inappropriate use of alternative accounts on websites, portals, forums, blogs or any other place where discussions take place. People create fake accounts and answer, share or like their own comments just to create the illusion that they have group support. Since it is hard for a third party to know whether a comment was written by another person, puppeteers may well fool them. Some marketing activities verge on sockpuppeting and are for that reason considered controversial. Actually, the idea predates the internet. It is known that some artists and politicians used to write reviews of themselves for the papers under pseudonyms. So public acclaim was faked even before it was called sockpuppeting.

Depending on the technique and purpose of creating a fake online account, there are different types of sockpuppetry:

• Strawman is the sockpuppet that aims to create negative sentiment around certain statements by discouraging the readers.

• Meatpuppet is an actual person hired to produce online content on a chosen subject, just as hired clappers in theatres used to do.

• Ballot Stuffer would vote multiple times in online polls to increase the chance of winning for the puppeteer.

Research shows that sometimes it is not that hard to spot this sort of malicious act on the web. First of all, the puppeteer sometimes forgets to switch accounts or gets logged in automatically, and their comment gets published under the original name. Even when this doesn’t happen, there are a few characteristics shared by sockpuppets that have been caught. More often than not, they have a similar username, login time and IP address. Besides, linguistic analysis has shown that sockpuppets write shorter sentences, address others directly more often and get downvoted more than the average internet user.
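The three shared signals named above (similar username, login time and IP address) lend themselves to a simple pairwise comparison for anyone with access to such account data, for example a forum moderator. The sketch below is an assumption-laden illustration: the `Account` fields, the 0.8 username cut-off and the one-hour login tolerance are all invented.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass
class Account:
    username: str
    ip_address: str
    login_hour: int  # hour of day of a typical login, 0-23


def suspicion_score(a: Account, b: Account) -> int:
    """Count how many of the three signals two accounts share (0 to 3)."""
    score = 0
    # Similar usernames; the 0.8 cut-off is an arbitrary assumption.
    if SequenceMatcher(None, a.username.lower(), b.username.lower()).ratio() >= 0.8:
        score += 1
    if a.ip_address == b.ip_address:
        score += 1
    # Logins within one hour of each other, wrapping around midnight.
    diff = abs(a.login_hour - b.login_hour)
    if min(diff, 24 - diff) <= 1:
        score += 1
    return score
```

A high score is a reason to look closer, not proof: people in one household or office share an IP address and often a daily rhythm.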

Key takeaway

Whenever you get involved in a fierce discussion on the web, check the usernames and login times, because even though it may feel like you argue with a whole bunch of people, it may be just one puppeteer.

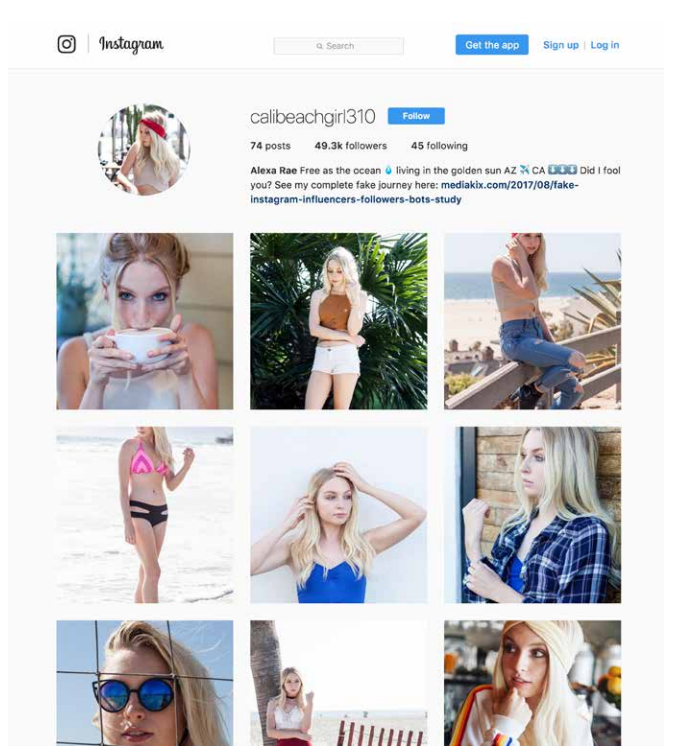

Chapter 12 Fake Influencers and Followers

These are two different types of malicious users, but they are inseparably linked. Given the user growth on Instagram, it is forecast that the influencer marketing business on this platform alone may reach $2 billion next year. Since becoming a social media influencer may seem to some people like a fast way to earn easy money, click and follower farms are popping up like mushrooms.

Marketing specialists want to grow their reach and address the right audience with the help of influencers. Nonetheless, they tend to pay little attention to what they are paying for, so it has become pretty easy to fool them. Take the experiment in which an influencer agency created two fabricated Instagram accounts, bought likes and engagement for about $300, and within just a few days secured its first deals with big brands for either money or free products.

Luckily, for the most part, the number of likes is no longer a key measurement factor for your ROI. A wise marketer knows that it is true engagement (the fake kind can be bought, obviously) that keeps conversion rates up and ensures retention.

The more ethical way for brands to buy the focused attention of potential customers is cooperation with influencers. How do you tell a real one from a fraud? There are a few easy steps:

- Check the followers. A real influencer with a loyal audience should have a large number of followers, but earned over a correspondingly long period of time. The number should also be in proportion to the number of followed accounts and original posts by the author. There are influencers who gain their status just by being invasive, reposting and gathering an audience, following a lot of people while creating no personal content (i.e. the “follow for follow” method).

- Inspect the position in the field. A real influencer has the authority based on personal expertise and is admired for that by the other industry representatives, which can be seen by direct mentions in discussions on a certain subject.

- Observe the engagement. Even though comments can be bought on “farms” as well, they are distinguishable from the real ones. They differ on a linguistic level and, most of all, when it comes to length or emoji usage. For vloggers, the number of video views may seem like a good indicator.

- Take note of the wider web. Put the potential influencer’s name into Google search or other social media and find out how many followers they have across different sources. The numbers should be close. There’s hardly any chance of becoming an influencer on just one channel.

To check an influencer’s reliability, use online mention analysis tools (like SentiOne) to track their reach.
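Some of the checks above boil down to simple arithmetic on public profile numbers. The following sketch shows one way to compute them; the `Profile` fields, the function names and every cut-off value are assumptions chosen for illustration, not industry standards.

```python
from dataclasses import dataclass


@dataclass
class Profile:
    followers: int
    following: int
    posts: int
    avg_likes: float
    avg_comments: float


def engagement_rate(p: Profile) -> float:
    """Average interactions per post divided by follower count."""
    if p.followers == 0:
        return 0.0
    return (p.avg_likes + p.avg_comments) / p.followers


def warning_signs(p: Profile) -> list[str]:
    """Return human-readable warnings; the cut-offs are arbitrary assumptions."""
    signs = []
    if p.following > p.followers:
        signs.append("follows more accounts than follow back")
    if p.posts < 10:
        signs.append("very little original content")
    if engagement_rate(p) < 0.01:
        signs.append("engagement below 1% of followers")
    return signs
```

An empty list does not certify a profile as genuine, since likes and comments can be bought as well; it only means none of these particular red flags were raised.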

Key takeaway

When considering working with influencers, always run a background check to see if likes were bought on fan farms. Of course, none of the tips above should be treated as a complete method on its own. Please keep in mind the principle of sound research.

Chapter 13 Conclusion

Although we live in a world of unlimited possibilities when it comes to connecting with people all over the world, we should never let our guard down. These possibilities may backfire, as some internet users are not playing fair. It is said that online cons can go as far as influencing elections in major countries.

There are tools that can determine the authenticity of certain information. We need to remember, though, that this software, however sophisticated its algorithms, isn’t perfect. By the time you release an automated tool that’s supposed to recognise a sockpuppet, the malicious user is probably already way ahead. It’s not that hard to fool a machine, after all. The most promising way out, tech-wise, is probably machine learning systems, which need a lot of data to learn from. So at the end of the day, the human touch and mindfulness are much needed.

To sum up, there are lots of things that we need to be aware of on the web. Even though the IT world is making an effort to fight malicious acts, there’s still a long way to go to prevent them. What we can do is take action on a day-to-day basis by reporting infringements, not engaging in discussions that feed trolls and sockpuppets, not sharing articles without double-checking, and so on. To do so, we must never cease to learn about what can be harmful to us and our companies. Not to spread fear, but to know how to dodge the bullet.

Chapter 14 Authors

Michał Brzezicki – CTO and Co-founder of SentiOne, software engineer, economist, traveller. In his recent work, he is focused on leading the Research Team at the company in the direction of developing AI systems supporting current SentiOne functionalities.

Katarzyna Kempa – content creator at SentiOne, linguist, NVC evangelist. As a writer in a tech company, she tries to find harmony between her creative and analytical, sci-fi-loving selves.

Chapter 15 About SentiOne

SentiOne is a social listening and online reputation management platform. The company was founded to help people discover and understand online conversations with an advanced yet user-friendly tool. Today, SentiOne is tested by over 15,000 brands all over the world and gathers billions of online data points in multiple languages to provide clients with actionable insights.

The analysis includes: sentiment analysis, reach estimation, geolocation, and many more functionalities providing social proof.