The magic behind the curtain: an overview of voicebot technology

Voicebots are the future. This might sound rather self-aggrandizing, coming from a voicebot company, but it’s true – and we have the data to back it up. The mass adoption of voice assistants such as Siri and Alexa paved the way for voicebot technology to be used in a wide variety of specific applications.

While they don’t get as much attention as the assistants we talk to every day, voicebots are hard at work in a lot of different businesses. They are an invaluable tool in customer service, for instance! As an example, a voicebot we implemented for the Polish financial company KRUK helped reduce their call centre volume by 23%!

It is, in part, thanks to this newfound popularity that we are publishing this article. We’ll take you behind the curtain and show you what exactly makes voicebots tick and why they are so sought after in today’s business world. Ready?

What is a voicebot?

A voicebot is a computer program operated through conversation. There’s even a term for it: “conversational user interface”. For decades, talking to your computer was primarily a staple of science fiction and not a practical reality. Remember HAL 9000?

“I’m sorry, Dave. I’m afraid I can’t do that.”

What Kubrick and Clarke demonstrated in 2001: A Space Odyssey wasn’t as far removed from reality as people usually like to think. In fact, voice synthesis, a fundamental part of voicebot design, was being worked on long before electronic computers were invented. By the time HAL 9000 captivated movie audiences in 1968, computers had been singing songs for nearly a decade.

In fact, computers could recognise human speech back then, too – with severe limitations, but still. In 1952, Bell Labs demonstrated the “Audrey” system, an enormous beast of vacuum tube circuitry that was capable of recognising digits from human speech. It couldn’t do much more than that, but if you really tried, you could dial a telephone number with Audrey.

The third piece of the voicebot puzzle is natural language processing: the set of techniques through which computers derive meaning from words and sentences. This, too, has been in the works for the better part of the twentieth century. In 1966, ELIZA was released – this program is often considered the very first chatbot. Through very basic language analysis, it could simulate a psychotherapy session – and it *almost* seemed humanlike!

Combine these three ingredients in a magic cauldron (or a whole bunch of technical universities), let them cook for a couple of decades, and presto – voicebots.

If we define them as “computer programs that do things based on human speech”, then the first commercial applications for the technology came in the late 1980s and early 1990s. These programs were used for dictation and could handle tens of thousands of words.

While useful, this was about as much as computers of the era could handle. This rapidly changed in the 2000s, as PCs became more and more powerful. Suddenly, text-based chatbots running as a service could handle tens of thousands of users simultaneously (you can read more about chatbots in our guide to chatbots!). Adding voice processing functionality on top of that was a logical progression.

That’s how we ended up with a wide variety of voicebots. We have virtual assistants, like Siri and Alexa, that can handle a wide selection of tasks: checking the weather, sending text messages, playing music. We also have application-specific voicebots: they route calls, provide technical support or perform various services specific to the company running them.

How are voicebots built?

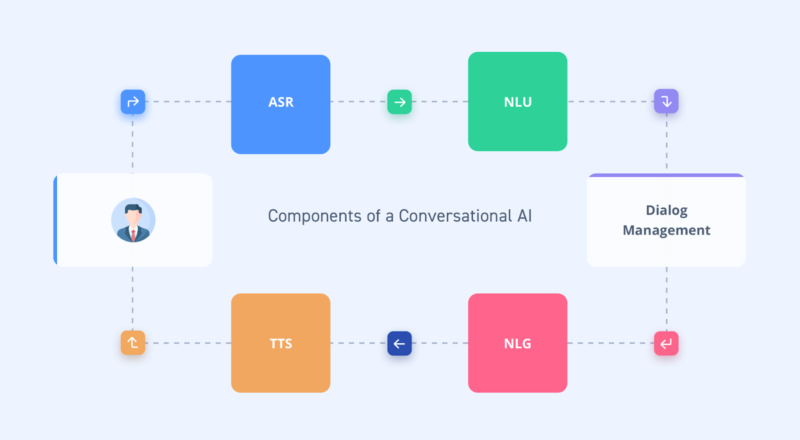

We already touched upon the key elements of a voicebot: speech recognition, natural language processing, speech synthesis. While these sound like complicated technologies, they are becoming increasingly accessible. By now it’s possible for essentially any company to roll their own voicebot using a platform such as SentiOne (cough, cough).
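To make the three elements a little more concrete, here is a minimal sketch of how they chain together. Everything in it is a toy assumption for illustration: the `Voicebot` class and the `fake_asr`, `fake_nlu` and `fake_tts` functions are hypothetical stand-ins (the "audio" is just UTF-8 text), not how SentiOne or any other platform actually works.

```python
from dataclasses import dataclass
from typing import Callable

# Each stage is a plain function, so any one of them can be swapped out.
Recogniser = Callable[[bytes], str]    # audio in, transcript out
Understander = Callable[[str], str]    # transcript in, reply text out
Synthesiser = Callable[[str], bytes]   # reply text in, audio out


@dataclass
class Voicebot:
    recognise: Recogniser
    understand: Understander
    synthesise: Synthesiser

    def handle(self, audio: bytes) -> bytes:
        """Run one caller utterance through all three stages in order."""
        transcript = self.recognise(audio)
        reply = self.understand(transcript)
        return self.synthesise(reply)


# Toy stand-ins: the "audio" here is simply UTF-8 text, NOT real sound data.
def fake_asr(audio: bytes) -> str:
    return audio.decode("utf-8").lower()


def fake_nlu(transcript: str) -> str:
    if "balance" in transcript:
        return "Your balance is available in the app."
    return "Sorry, could you rephrase that?"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


bot = Voicebot(fake_asr, fake_nlu, fake_tts)
print(bot.handle(b"What is my BALANCE?").decode("utf-8"))
```

Because each stage only agrees on its input and output, replacing the toy recogniser with a real speech recognition service would not require touching the other two.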

In fact, we’ve already written a comprehensive guide to voicebot implementation (part 2) – so in the interest of not repeating ourselves too much, here’s the CliffsNotes version.

In order to develop a voicebot, you first need to define its functionality. What problem do you need it to solve? How will you measure its success? What metrics will you use?

Secondly, analyse the processes you will pass onto the bot. Can they be defined as a series of specific actions that need to be performed in order?
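One way to check whether a process fits this description is to try writing it down as an ordered list of steps. The sketch below does that for an invented "reschedule a payment" process; the `Step` and `Process` classes and the prompts are hypothetical, chosen purely for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Step:
    name: str     # key under which the caller's answer is stored
    prompt: str   # what the bot says to the caller


@dataclass
class Process:
    steps: list[Step]
    answers: dict[str, str] = field(default_factory=dict)

    def next_prompt(self) -> Optional[str]:
        """Return the prompt for the first unanswered step, or None when done."""
        for step in self.steps:
            if step.name not in self.answers:
                return step.prompt
        return None

    def answer(self, value: str) -> None:
        """Record the caller's answer against the first unanswered step."""
        for step in self.steps:
            if step.name not in self.answers:
                self.answers[step.name] = value
                return
        raise ValueError("process already complete")


# Hypothetical process: every step must be completed before the next one.
reschedule = Process([
    Step("customer_id", "What is your customer number?"),
    Step("new_date", "Which date would suit you?"),
    Step("confirm", "Shall I book that for you?"),
])
reschedule.answer("12345")
print(reschedule.next_prompt())
```

If a process resists being written down like this, with steps that depend heavily on judgement calls, it is probably a poor first candidate for a bot.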

Thirdly: implement and test an initial version. Unless you have the resources for an entire voicebot R&D division, you’ll be using a platform like SentiOne. This allows you to build a proof-of-concept implementation extremely quickly.

At this point, you start training your language recognition algorithms. Every company is built differently, and every customer is different. Take five people, and you’ll find fifteen ways of asking the same question. Using the power of machine learning, your bot will learn to recognise all of them – and respond appropriately.
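To show the idea of "many phrasings, one meaning", here is a deliberately simple sketch: it compares a new utterance against a handful of example phrasings using bag-of-words cosine similarity. This is a stand-in technique picked for brevity, not the intent recognition model used in any production platform, and the intents and phrases in `EXAMPLES` are made up.

```python
import re
from collections import Counter
from math import sqrt

# Hypothetical training data: several phrasings per intent.
EXAMPLES = {
    "check_balance": [
        "what is my balance",
        "how much money do I have",
        "tell me my account balance",
    ],
    "report_lost_card": [
        "I lost my card",
        "my card was stolen",
        "I cannot find my credit card",
    ],
}


def bag_of_words(text: str) -> Counter:
    """Count lowercase word occurrences, ignoring punctuation."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a)
    norm = sqrt(sum(v * v for v in a.values())) * sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def classify(utterance: str) -> tuple[str, float]:
    """Return the intent whose closest example best matches the utterance."""
    query = bag_of_words(utterance)
    best_intent, best_score = "unknown", 0.0
    for intent, phrases in EXAMPLES.items():
        for phrase in phrases:
            score = cosine(query, bag_of_words(phrase))
            if score > best_score:
                best_intent, best_score = intent, score
    return best_intent, best_score


print(classify("how much money is on my account"))
print(classify("someone stole my card"))
```

Training, in this toy picture, simply means adding more real customer phrasings to each intent. A real system learns far richer patterns, but the principle of collecting varied examples is the same.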

Once you’re satisfied with the performance of your initial implementation, scale up the deployment. Go slow and steady – if you initially tested your bot with twenty different people, go up to a hundred, then a thousand. It’s vitally important not to throw yourself into deep water from the start: this gradual ramp-up is where you’ll catch any errors in your scaling. Once you’re satisfied your bot can potentially handle your entire client base at once – that’s when you launch.
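The slow-and-steady approach can be planned ahead of time. The sketch below is one possible way to do it, under assumptions of our own choosing: the growth factor, the 5% error threshold and the function names `rollout_stages` and `may_advance` are all hypothetical.

```python
def rollout_stages(start: int, total: int, factor: int = 5) -> list[int]:
    """Return growing audience sizes from `start` up to `total` users."""
    if start <= 0 or total < start or factor < 2:
        raise ValueError("need 0 < start <= total and factor >= 2")
    stages = []
    size = start
    while size < total:
        stages.append(size)
        size *= factor
    stages.append(total)  # the final stage always covers the whole client base
    return stages


def may_advance(errors: int, conversations: int, max_error_rate: float = 0.05) -> bool:
    """Move to the next stage only if the observed error rate is acceptable."""
    if conversations == 0:
        return False  # no evidence yet, so stay at the current stage
    return errors / conversations <= max_error_rate


print(rollout_stages(20, 10_000))
print(may_advance(errors=3, conversations=100))
```

The point of the gate is that each stage has to earn the next one: if the error rate climbs at a hundred users, you fix the problem there rather than discovering it at ten thousand.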

The key technologies used in voicebots

Let’s circle back and focus on the technologies some more. We understand the basic ideas: let’s go deeper. We’ve asked our engineers to explain the principles behind the magic that makes voicebots possible. Here’s what we learned from them:

Automated Speech Recognition

Automated speech recognition (ASR) has been a field of continuous study since the early 1950s. In 1952, Bell Labs demonstrated a prototype device called Audrey, capable of recognising digits in human speech. It wasn’t until the 1990s that the technology made major strides, though – largely due to evolving language models and increasing processing capabilities in contemporary computers.

By the early 2000s, personal computers were able to perform speech recognition. Windows XP, released in October 2001, came with a myriad of accessibility features – such as voice control, using speech recognition software designed by the company Lernout & Hauspie. The same technology later formed the basis for Apple’s Siri.

Text-to-Speech

Text-to-speech technology, also referred to as “speech synthesis”, actually predates the invention of the electronic computer. A working prototype of a simulated human vocal tract was built all the way back in 1837!

As we mentioned earlier, by the 1960s we were making computers sing. Granted, they still sounded distinctly different from humans, but the technology kept improving as time went on. Although you can still tell Siri isn’t a real person, she is just human enough for us to develop an emotional bond with her.

This psychological phenomenon only proves how important good text-to-speech technology is!

Natural Language Understanding engines

Despite the popularity of artificial intelligence as a concept (thanks, science fiction), the phrase “natural language understanding” is still somewhat unknown to most people. It is exactly what it says on the tin: through NLU, computers “understand” written text.

This is one of the oldest branches of AI research, with the first proof-of-concept implementations (such as STUDENT) dating back to the 1960s. NLU engines underwent largely the same evolution as other techniques: primarily theoretical until computers got exponentially faster in the 1990s and 2000s.

Bridging NLU engines to voicebots brings with it new challenges: after all, we feed speech-to-text data to them. In casual conversation, we rarely use well-structured speech, like we would when writing a letter. NLU engines therefore have the additional job of parsing through very unstructured speech, recognising idioms and slang, and using that data to deliver accurate results.
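To give a feel for what "parsing through very unstructured speech" involves, here is a small sketch of a clean-up pass that could run on a transcript before it reaches an NLU engine. The word lists in `FILLERS` and `SLANG` are hypothetical, and real engines handle this in far more sophisticated ways than simple lookups.

```python
import re

# Hypothetical word lists; a real deployment would build these from its own transcripts.
FILLERS = {"um", "uh", "erm", "hmm"}
SLANG = {"gonna": "going to", "wanna": "want to", "dunno": "do not know"}


def normalise(transcript: str) -> str:
    """Lowercase a transcript, drop fillers, expand slang and collapse stutters."""
    words = re.findall(r"[a-z0-9']+", transcript.lower())
    cleaned = []
    for word in words:
        if word in FILLERS:
            continue
        word = SLANG.get(word, word)
        # Collapse immediate repetitions such as "my my card" (a common stutter).
        # Note: this also collapses legitimate doubles such as "that that".
        if cleaned and cleaned[-1] == word:
            continue
        cleaned.append(word)
    return " ".join(cleaned)


print(normalise("Um, I, uh, I wanna, erm, check my my balance"))
```

Even this crude pass shows the trade-off involved: every rule that removes noise can also remove meaning, which is one reason modern engines learn these patterns from data instead of relying on fixed lists.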

Machine Learning

Through machine learning, computers improve their performance over time. It is one of the most important aspects of modern voicebots.

It is through machine learning that bots can learn to understand a wide range of accents and voices. The big advantage of machine learning is that it never stops – the data set continuously expands as the voicebot continues working. Once a certain threshold is reached, the bot can adapt to new words, voices and accents without any input from its developers – like something straight out of a science fiction film.

Machine learning can be (and is!) applied to every stage of voicebot development. Because of this, our speech recognition systems can easily learn to understand extremely varied types of voices and accents, our NLU engines make fewer mistakes and our speech synthesis accurately creates words it has never encountered before!

Top voicebot technology providers

In the world of software development, there is a concept referred to as “the Unix principle” (you may also know it as the Unix philosophy). The name, obviously, comes from the Unix operating system – which, in various forms (such as GNU/Linux), is still used to this day to run much of the world.

The Unix principle is very simple: your program should do one thing, and do it well.

In practice, this means programs should be simple, predictable modules that can be linked together. That way, the user can mix-and-match the tools they need to solve their particular problem – without having to develop an entirely new application.

Developing modern voicebots is very similar: why waste time reinventing the wheel, when various companies are already solving the problems required to create a bot?

These technology providers offer some – or all – of the parts necessary for a company to roll their own bot.

SentiOne Automate

Forgive us for being immodest, but we can’t start a roundup like this without mentioning our proudest accomplishment. SentiOne Automate is our all-in-one platform for designing, prototyping and hosting enterprise chatbots and voicebots. Our intent recognition model is currently the best in its class – and we’re constantly hard at work to improve our accuracy even further.

VoiceFlow

VoiceFlow is a cloud platform for building conversational apps across voice channels. Founded in 2018, this young company has worked with some very big names, including Spotify, Google, and the New York Times.

Techmo

Techmo is a Polish company that provides speech synthesis technology, as well as voice recognition and analytics. Their technology can be deployed both in the cloud and on-premises, which makes them an ideal vendor for a wide range of potential solutions.

Rev.AI

Rev.AI offers an advanced audio transcription engine, trained on a database of over 50,000 hours of real conversations. Their technology also offers an incredibly robust API, which allows it to be easily integrated into any existing business solution.

Google, Amazon, Microsoft

Finally, there are the tech giants. All of these companies provide well-established technologies that can be used when developing your voicebot: NLU engines, automated speech recognition, text-to-speech systems… You name it, they have it. They also provide cloud services on which you can host your applications.