New state-of-the-art intent detection model from SentiOne

What is intent detection?

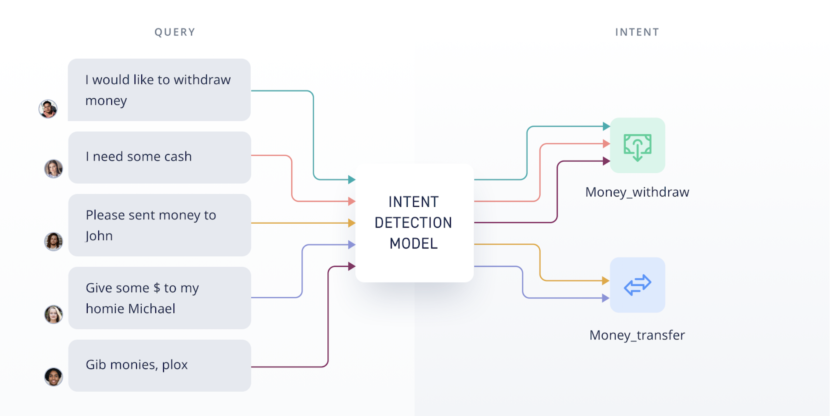

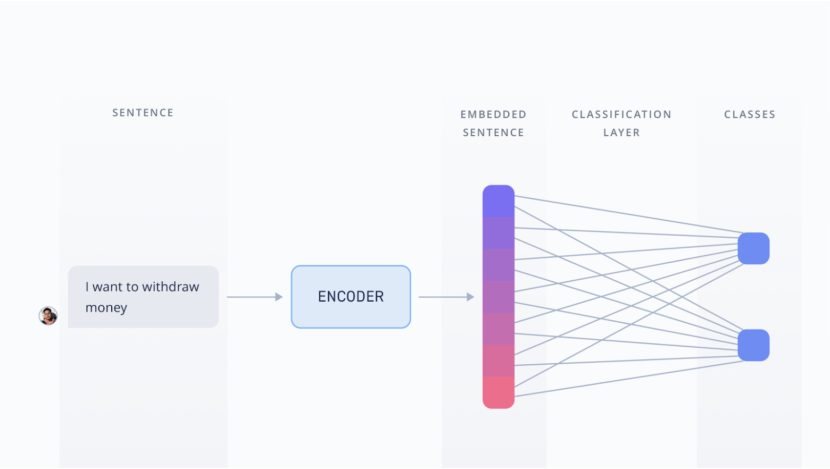

Intent detection is a text classification task used in chat-bots and intelligent dialogue systems. Its goal is to capture the meaning behind a user’s message and assign the message the right label (intent). Given only a few training examples per intent, such a system has to learn to recognize new messages that belong to the same categories. This is often a tough task, as users tend to phrase their requests ambiguously.
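To make the task concrete, here is a minimal few-shot intent classifier. It is not SentiOne’s model: it swaps in a deliberately naive nearest-centroid method over bag-of-words counts, and the intent names and example phrases are hypothetical.

```python
import math
from collections import Counter


def bag_of_words(text):
    """Naive featurizer: lower-cased, whitespace-split word counts."""
    return Counter(text.lower().split())


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def train(examples):
    """Build one centroid (summed word counts) per intent from a few labelled examples."""
    centroids = {}
    for intent, texts in examples.items():
        centroid = Counter()
        for text in texts:
            centroid.update(bag_of_words(text))
        centroids[intent] = centroid
    return centroids


def predict(centroids, text):
    """Assign the message to the intent whose centroid is most similar."""
    features = bag_of_words(text)
    return max(centroids, key=lambda intent: cosine(features, centroids[intent]))


# Hypothetical intents with only two examples each
examples = {
    "check_balance": ["what is my account balance", "how much money do I have"],
    "reset_password": ["I forgot my password", "help me reset my password"],
}
centroids = train(examples)
print(predict(centroids, "please reset my password"))   # reset_password
print(predict(centroids, "how much is in my account"))  # check_balance
```

Because it only matches surface words, a classifier like this breaks down on the ambiguous, differently phrased requests mentioned above, which is what motivates learned sentence representations.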

Why is intent detection important?

Intent detection is a crucial component of many Natural Language Understanding (NLU) systems. It is especially important in chat-bots: without intent detection, we wouldn’t be able to build reliable dialogue graphs. Detecting what a user means is the primary function of any intelligent dialogue system. It allows the system to steer the conversation in the right direction, answer users’ questions, and perform the actions users request.

Our model

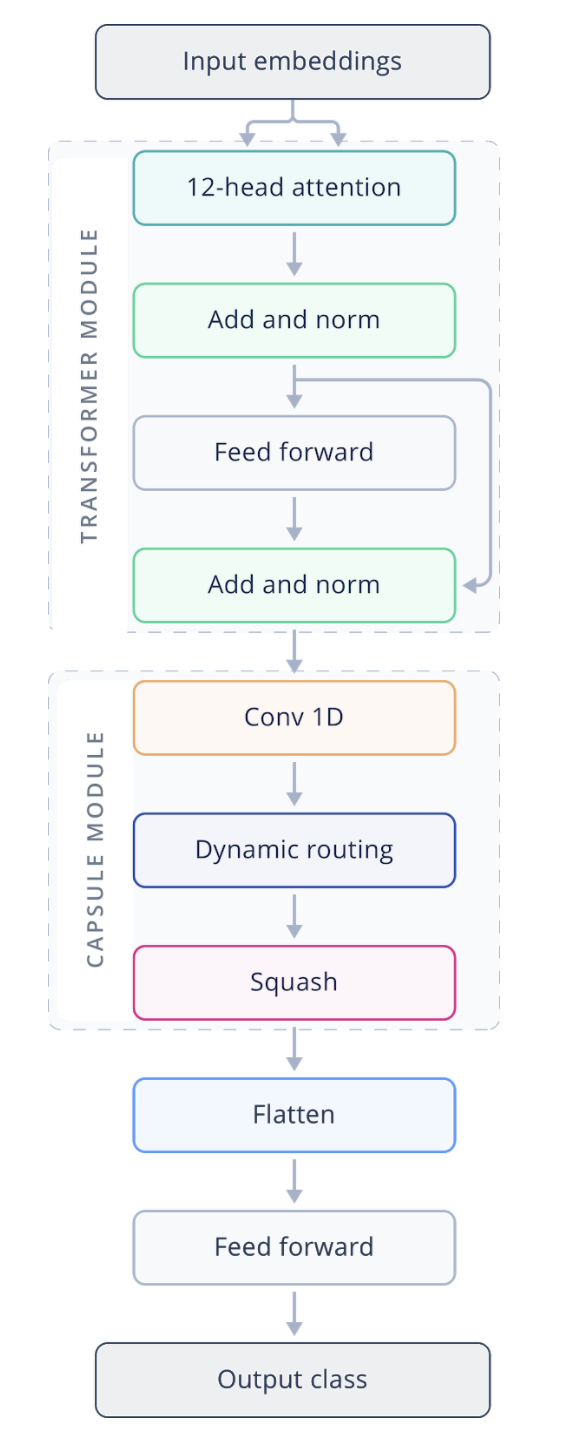

The architecture of our intent detection model is based on a Transformer encoder and Capsule Neural Networks.

Transformer

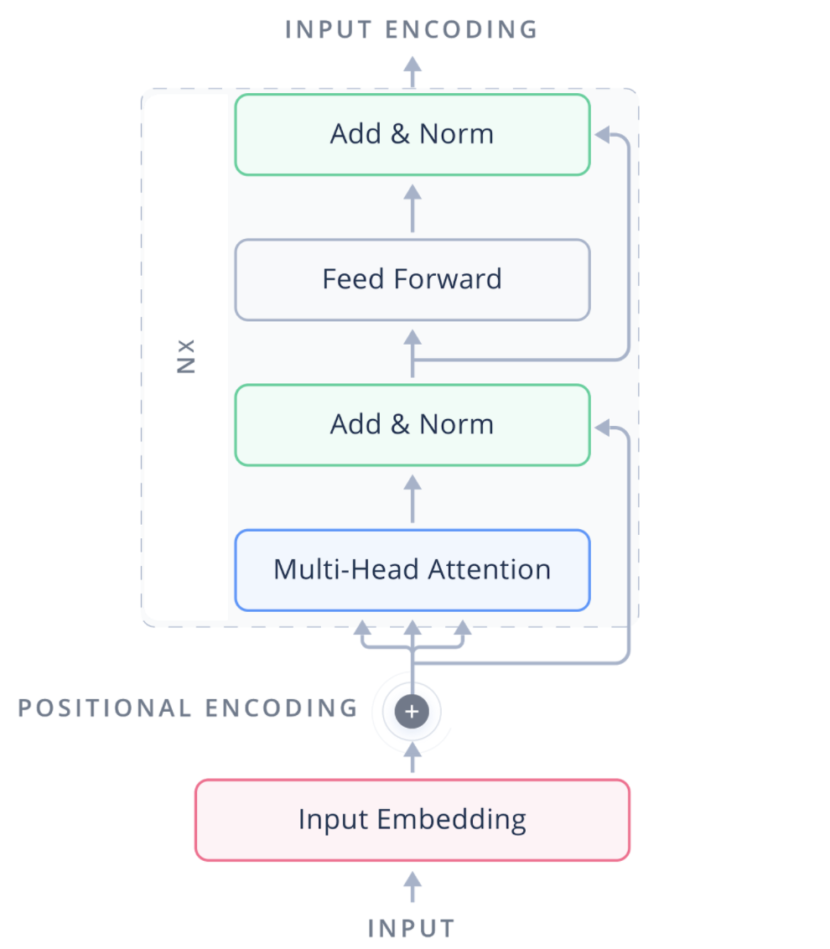

Transformers were first introduced in 2017 in the paper “Attention Is All You Need”. Since then, they have been used in well-known language models such as BERT and GPT-3, as well as in other state-of-the-art NLP models.

They use attention mechanisms to encode how relevant each word is to every other word in the analyzed sequence. Transformers typically use multi-head attention, in which each “head” looks at the analyzed sentence in a different context. This enables the Transformer to produce an accurate, multi-context sentence embedding (a vector) for the processed sequence. This vector can then be passed to a classification layer that assigns a label to the sequence.
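The attention computation can be sketched in a few lines of NumPy. This is a minimal, untrained illustration of standard multi-head scaled dot-product self-attention, not our production encoder: the random weights, the toy sizes (5 tokens, 48-dimensional vectors) and the mean pooling into a single sentence vector are assumptions for illustration; only the 12 heads match the configuration of our model.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """x: (seq_len, d_model) token vectors. Returns contextual vectors and attention weights."""
    seq_len, d_model = x.shape
    d_head = d_model // num_heads

    def split(t):
        # (seq_len, d_model) -> (num_heads, seq_len, d_head)
        return t.reshape(seq_len, num_heads, d_head).transpose(1, 0, 2)

    q, k, v = split(x @ w_q), split(x @ w_k), split(x @ w_v)
    # Per head: how relevant each token is to every other token
    scores = q @ k.transpose(0, 2, 1) / np.sqrt(d_head)  # (num_heads, seq_len, seq_len)
    weights = softmax(scores, axis=-1)
    heads = weights @ v                                   # (num_heads, seq_len, d_head)
    # Concatenate the heads and mix them with the output projection
    concat = heads.transpose(1, 0, 2).reshape(seq_len, d_model)
    return concat @ w_o, weights


rng = np.random.default_rng(0)
seq_len, d_model, num_heads = 5, 48, 12   # toy sizes; 12 heads as in our model
x = rng.normal(size=(seq_len, d_model))   # stand-in for token embeddings
w_q, w_k, w_v, w_o = (rng.normal(scale=d_model ** -0.5, size=(d_model, d_model)) for _ in range(4))
out, attn = multi_head_self_attention(x, w_q, w_k, w_v, w_o, num_heads)
sentence_vector = out.mean(axis=0)        # mean pooling: one common choice, assumed here
```

Each of the 12 rows of attention weights per token sums to 1, so every head produces its own weighted view of the sentence before the heads are recombined.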

Capsule neural networks

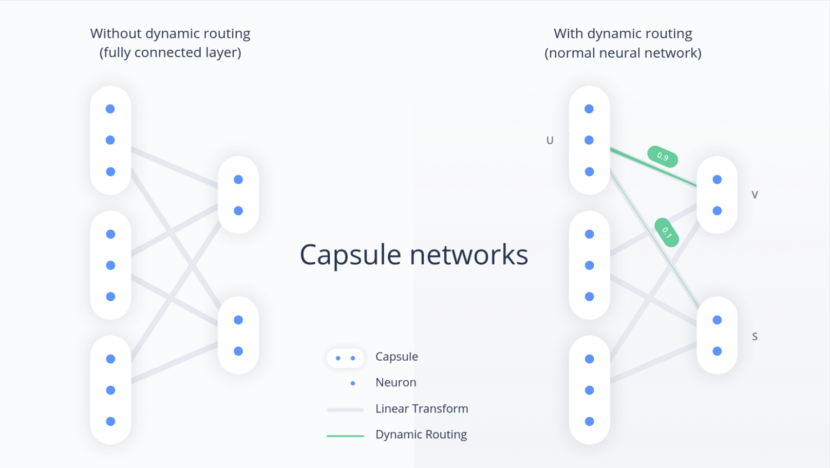

Capsule networks, so far used primarily in image recognition, are a new type of neural network introduced by Geoffrey Hinton, who is often described as the ‘godfather’ of deep learning. The core idea is to group neurons that represent related features into so-called capsules. Each capsule can be interpreted as a vector whose orientation encodes the features and whose length represents the probability that those features are present.
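The “length as probability” interpretation is usually enforced with the squash non-linearity from the original capsule network paper (Sabour, Frosst and Hinton, 2017), which maps a vector’s length into the range [0, 1) without changing its direction. A minimal NumPy sketch, assuming that formulation:

```python
import numpy as np


def squash(s, axis=-1, eps=1e-9):
    """Capsule non-linearity: length becomes |s|^2 / (1 + |s|^2), direction is unchanged.
    eps only guards against division by zero for all-zero vectors."""
    sq_norm = np.sum(s ** 2, axis=axis, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)


# Two capsules pointing the same way (same feature) with different activation strength
weak = squash(np.array([0.1, 0.0, 0.0]))
strong = squash(np.array([10.0, 0.0, 0.0]))
print(np.linalg.norm(weak), np.linalg.norm(strong))  # approximately 0.0099 and 0.9901
```

Both outputs point in the same direction, so they describe the same feature; only the strong one is long enough to signal that the feature is likely present.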

Another difference between Capsule Networks and standard neural networks is Dynamic Routing. This iterative algorithm strengthens the connection from each capsule to those capsules in the next layer whose outputs agree with its predictions. In effect, it encourages the detection of features that are useful to the next layer and discourages the detection of features that are not.
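Routing by agreement can be sketched as follows, following the procedure from the original capsule network paper. This is an illustrative NumPy sketch rather than our production code; the toy prediction vectors `u_hat` are made up so that all three lower capsules agree about upper capsule 0 and disagree about upper capsule 1.

```python
import numpy as np


def squash(s, axis=-1, eps=1e-9):
    """Scale vectors to length in [0, 1) while keeping their direction."""
    sq_norm = np.sum(s ** 2, axis=axis, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)


def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def dynamic_routing(u_hat, num_iterations=3):
    """u_hat[i, j]: prediction of lower capsule i for upper capsule j, shape (n_in, n_out, d).
    Returns upper-capsule outputs v (n_out, d) and coupling coefficients c (n_in, n_out)."""
    b = np.zeros(u_hat.shape[:2])                  # routing logits: start with no preference
    for _ in range(num_iterations):
        c = softmax(b, axis=1)                     # each lower capsule splits its output over upper capsules
        s = np.einsum("ij,ijd->jd", c, u_hat)      # weighted vote for each upper capsule
        v = squash(s)
        b = b + np.einsum("ijd,jd->ij", u_hat, v)  # agreement (dot product) raises the logit
    return v, c


# Toy predictions: all lower capsules agree on upper capsule 0, disagree on upper capsule 1
u_hat = np.array([
    [[1.0, 0.0], [1.0, 0.0]],
    [[1.0, 0.0], [-1.0, 0.0]],
    [[1.0, 0.0], [0.0, 1.0]],
])
v, c = dynamic_routing(u_hat, num_iterations=4)
```

After the first iteration the coupling coefficients are uniform (0.5 each); with more iterations every lower capsule sends most of its output to capsule 0, and capsule 0 ends up much longer than capsule 1. That is the “promote useful features” behaviour described above.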

Our architecture

Our architecture combines the encoder part of a Transformer model with capsule neural networks. The Transformer module uses 12-head attention, which produces a sentence vector built by analyzing each word of the sentence in 12 different contexts. This vector is the input to the capsule module, which consists of 2 capsule layers designed specifically for text. In this module, neurons are grouped into 15-dimensional capsules. Between the two layers, dynamic routing runs for 4 iterations, which emphasizes useful features while still giving some weight to less important ones. Finally, the classification layer uses the sentence representation produced by the capsule network to match the user’s message with the right intent.
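Putting the pieces together, the forward pass of the capsule module might look like the sketch below. The 15-dimensional capsules and 4 routing iterations come from the description above; everything else is an assumption for illustration: the sentence vector is simply reshaped into primary capsules, each primary capsule predicts every intent capsule through its own (here random, untrained) 15×15 matrix, and the intent is chosen as the longest output capsule, as in the original capsule papers. Our actual classification layer may differ, and the intent names are hypothetical.

```python
import numpy as np

CAPSULE_DIM = 15        # capsule size stated in the post
ROUTING_ITERATIONS = 4  # routing iterations stated in the post


def squash(s, axis=-1, eps=1e-9):
    """Scale capsule vectors to length in [0, 1), keeping their direction."""
    sq_norm = np.sum(s ** 2, axis=axis, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)


def softmax(x, axis=-1):
    e = np.exp(x - x.max(axis=axis, keepdims=True))
    return e / e.sum(axis=axis, keepdims=True)


def intent_capsules(sentence_vector, w, num_iterations=ROUTING_ITERATIONS):
    """sentence_vector: (n_primary * CAPSULE_DIM,)
    w: (n_primary, n_intents, CAPSULE_DIM, CAPSULE_DIM) transformation matrices (assumed)."""
    # Capsule layer 1: group the sentence vector's neurons into 15-dim primary capsules
    u = squash(sentence_vector.reshape(-1, CAPSULE_DIM))  # (n_primary, 15)
    # Each primary capsule predicts every intent capsule via its own matrix
    u_hat = np.einsum("ijdk,ik->ijd", w, u)               # (n_primary, n_intents, 15)
    # Capsule layer 2: dynamic routing between primary and intent capsules
    b = np.zeros(u_hat.shape[:2])
    for _ in range(num_iterations):
        c = softmax(b, axis=1)
        v = squash(np.einsum("ij,ijd->jd", c, u_hat))     # (n_intents, 15)
        b = b + np.einsum("ijd,jd->ij", u_hat, v)
    return v


def classify(sentence_vector, w, intents):
    v = intent_capsules(sentence_vector, w)
    lengths = np.linalg.norm(v, axis=-1)  # length ~ probability that the intent is present
    return intents[int(np.argmax(lengths))], lengths


# Hypothetical usage with random, untrained parameters (the prediction is meaningless)
intents = ["check_balance", "reset_password", "greeting"]
rng = np.random.default_rng(0)
n_primary = 8
sentence_vector = rng.normal(size=n_primary * CAPSULE_DIM)  # stand-in for the encoder output
w = rng.normal(scale=0.1, size=(n_primary, len(intents), CAPSULE_DIM, CAPSULE_DIM))
label, lengths = classify(sentence_vector, w, intents)
```

In a trained model, `w` (and the encoder) would be learned so that the capsule for the correct intent is the longest one.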

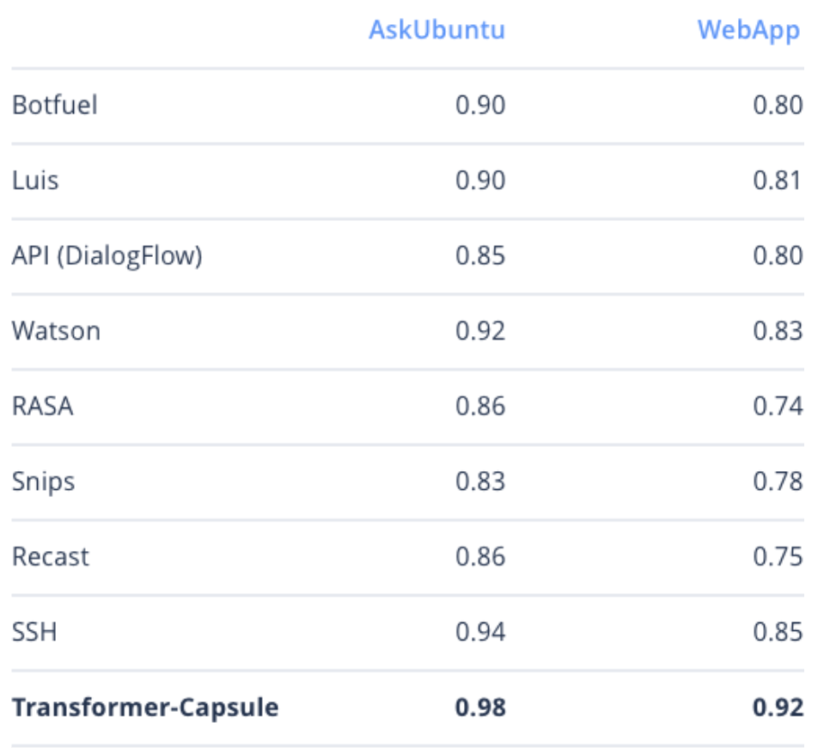

Comparison and results

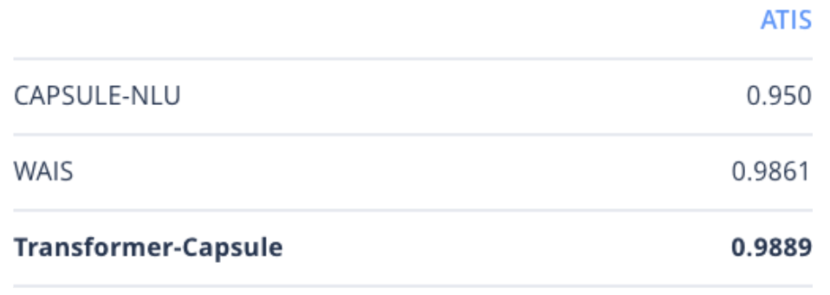

We evaluated our model on 3 public intent recognition datasets. The first two (AskUbuntu and WebApp) are small datasets with only a few examples per intent. On these datasets, we compared our model with popular NLU models used by other chat-bot companies, as well as with Subword Semantic Hashing (SSH), the previous best solution on these datasets. Our model achieves state-of-the-art results, outperforming the previous best by a large margin.

The third dataset is the Airline Travel Information System (ATIS) dataset. It is larger, with more than 4000 training examples. Here we compared our solution with the previous state of the art (WAIS) and with the original capsule-based NLU model (CAPSULE-NLU).