RAG: How does Retrieval Augmented Generation revolutionize Conversational AI?

The field of Conversational AI has experienced a paradigm shift thanks to the advent of Retrieval Augmented Generation (RAG). Traditional chatbots and conversational agents have long relied on predefined responses and limited contextual understanding, often falling short in providing accurate and relevant information.

However, RAG, a novel approach that combines the strengths of retrieval-based and generative models, is set to revolutionize how machines engage in dialogue. By dynamically retrieving pertinent information from vast datasets and seamlessly integrating it into natural language generation, RAG enables conversational agents to deliver more informative, coherent, and contextually appropriate responses. This article delves into the mechanics of RAG, explores its impact on Conversational AI, and examines the potential applications and future paths of this groundbreaking technology.

What is RAG and how does it work?

Simply put, Retrieval Augmented Generation (RAG) is an AI framework that enhances the performance of large language models (LLMs) by letting them use an external or internal database as the primary source of information, so that they can give more precise answers to queries.

The biggest improvement over using an LLM alone is the ability to retrieve specific information from a database of our choice. Combined with the capabilities of large language models, this makes the resulting output much more accurate and contextually relevant.

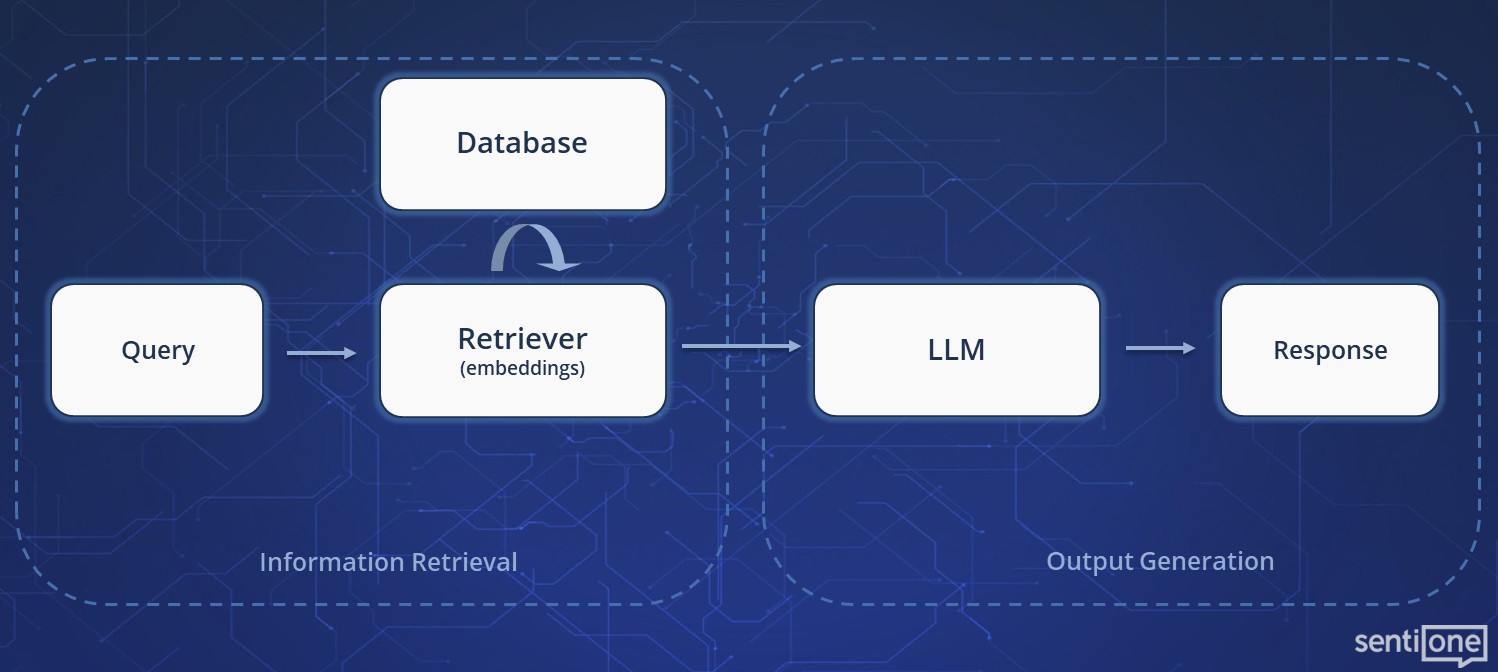

As for the mechanics, RAG combines two core techniques: retrieval of relevant information and generation of a response on its basis.

In the retrieval phase, the system searches the provided corpus to find relevant documents or pieces of information. This step ensures that the model has access to specific, up-to-date, and contextually relevant data. Step by step, it works as follows. First, the dataset that is to serve as the source of information must be indexed, that is, organized in a way that makes searching quick and efficient. Various techniques may be used for this purpose, such as tokenization, embedding generation, or search indexes. The query is processed in the same way. Once embeddings exist for both the dataset and the query, a similarity search can be performed, which identifies the information in the dataset that is semantically similar to the query. Finally, the retrieved data is assessed for its relevance to the query.

In the second phase, the selected information is forwarded to the generative model to produce a coherent and contextual response.
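The indexing, similarity search, and hand-off to the generative model described above can be sketched in a few lines of Python. This is only an illustration under simplifying assumptions: the `embed` function is a toy bag-of-words stand-in for a real embedding model, and all function names (`build_index`, `retrieve`, `build_prompt`) are hypothetical rather than part of any particular library.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: a bag-of-words count vector (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def build_index(documents: list[str]) -> list[tuple[str, Counter]]:
    """Indexing step: embed every document once, ahead of query time."""
    return [(doc, embed(doc)) for doc in documents]


def retrieve(query: str, index: list[tuple[str, Counter]], top_k: int = 2) -> list[str]:
    """Embed the query the same way, then return up to top_k most similar documents."""
    query_vec = embed(query)
    scored = [(cosine_similarity(query_vec, vec), doc) for doc, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Documents with no overlap at all are treated as irrelevant and dropped.
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query: str, passages: list[str]) -> str:
    """Second phase input: the retrieved passages are placed in front of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {query}"
```

In a real system the count vectors would be replaced by dense embeddings stored in a vector database, and the string returned by `build_prompt` would be sent to the generative model.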

Advantages of RAG

One of the most valuable advantages of RAG is higher accuracy in answer generation. By accessing external sources of information, RAG can verify and supplement data, reducing the risk of errors and misinformation. In practice, this means that instead of relying solely on pre-trained data, a RAG model dynamically reaches out to current and diverse knowledge bases, such as articles, documents, tables, or other resources. This ensures that responses are more accurate, up-to-date, and contextually aligned with user queries, and that they can be adapted to a specific industry or sector.

RAG is also capable of processing larger amounts of data in real time. Traditional answer generation systems are limited to the information contained in their static databases, whereas RAG can dynamically search and utilize external information sources in response to user queries. This means that it can effectively manage large and complex datasets, providing quick and accurate answers regardless of their volume.

By accessing external sources of information, RAG also reduces the risk of errors and hallucinations. One of the key issues in traditional answer generation systems is the risk of generating incorrect information (hallucinations) or imprecise information. RAG, with its approach based on real-time processing and access to external sources, significantly reduces this risk. The ability to verify data in real time and to use current and reliable resources makes the answers generated by RAG more trustworthy and accurate.

Practical applications of RAG

The advantages mentioned above make RAG an extremely versatile tool, applicable in multiple industries. In medicine, RAG can support medical staff by providing up-to-date information on the latest research, therapies, or medications. In the banking sector, RAG may be used to analyze large financial datasets. It could also serve as a tool for personalizing banking services, tailoring offers to individual customer needs. In telecom, it can assist in managing and analyzing large datasets from companies’ networks. It might also support troubleshooting by accessing a knowledge base on the latest technologies and standards. RAG can also be incorporated in many other fields to aid in large-scale data analysis as well as content creation. Whether used for internal purposes or for conducting business, it can become an indispensable tool for any data-related work.

With its ability to gather and analyze large datasets, RAG takes chatbots and virtual assistants to another level, as it allows for quick retrieval of relevant information and consequently improves the general user experience. The responses it provides are accurate and informative. Paired with contextual awareness, this makes the conversation more natural and satisfying, as the user is presented with a fast and straightforward answer to their query.

For chatbots, incorporating RAG means enabling them to better perform one of their core functions: providing information. A bot equipped with RAG will be able to quickly navigate various data, such as rules and regulations or web content, and come up with a precise answer that would otherwise be hard for the user to find. Additionally, the bot will be able to point to where the information was found, so that the user can read more on the topic if they wish. The message generated by RAG can therefore stay concise, because the listed sources let the user find further explanations. Not only does this improve users’ satisfaction, but it also demonstrates the bot’s credibility.
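One way a bot can point to where its information was found is to keep a source label alongside every retrieved passage and to attach the list of sources to the reply. The sketch below is a hedged illustration of that idea: the `Passage` class, its `source` field, and both function names are hypothetical, and it is not a description of how any specific platform implements citations.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Passage:
    text: str
    source: str  # hypothetical field: a title or file name stored with each indexed chunk


def build_cited_prompt(question: str, passages: list[Passage]) -> str:
    """Number each passage so the model can refer to it as [1], [2], ..."""
    context = "\n".join(f"[{i}] {p.text}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the numbered context. "
        "Cite the passages you used, e.g. [1].\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


def append_sources(answer: str, passages: list[Passage]) -> str:
    """Attach the source list, using the same numbering as in the prompt."""
    listing = "\n".join(f"[{i}] {p.source}" for i, p in enumerate(passages, start=1))
    return f"{answer}\n\nSources:\n{listing}"
```

Because the numbering in the prompt and in the source list is the same, a marker such as `[1]` in the generated answer can be traced back to the document it came from.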

Challenges of RAG

The implementation of RAG comes with several significant challenges that can impact its widespread adoption across industries. One of the primary issues is the additional computational cost. Integrating generative models with retrieval mechanisms requires substantial computational power, which increases costs and demands advanced technological infrastructure.

Another challenge is the need for large and well-organized datasets. In RAG systems, input quality plays a crucial role in achieving high precision of the generated responses. The data used for RAG must not only be accurate and up-to-date but also properly structured. Poor-quality data can lead to errors or incomplete results, so it is essential that the information is carefully processed and cleaned of noise and duplicates.
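As a minimal sketch of what cleaning noise and duplicates can look like, the snippet below normalizes whitespace, drops empty entries, and removes repeated records. It assumes the dataset is a simple list of text records and only catches exact duplicates after normalization; the function names are hypothetical, and real pipelines often need more elaborate near-duplicate detection.

```python
import re


def normalize(text: str) -> str:
    """Collapse runs of whitespace and trim the ends."""
    return re.sub(r"\s+", " ", text).strip()


def clean_records(records: list[str]) -> list[str]:
    """Drop empty entries and duplicates (compared case-insensitively), keeping first occurrences in order."""
    seen: set[str] = set()
    cleaned: list[str] = []
    for record in records:
        text = normalize(record)
        key = text.casefold()
        if not text or key in seen:
            continue
        seen.add(key)
        cleaned.append(text)
    return cleaned
```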

The format in which the data is stored also deserves attention, as it can significantly impact the system’s performance. Markdown is one of the popular text formats used for storing documentation in a manner that is readable by both humans and machines. Thanks to the simplicity of its syntax, it is easy to create well-formatted texts, tables, headings, or lists, which facilitate subsequent processing by RAG. Tables in Markdown format allow for a clear presentation of data, making individual values easier to identify.
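To illustrate why Markdown structure helps, the following sketch splits a Markdown document into one chunk per heading, so that each chunk can later be indexed together with the heading that describes it. It is a simplified example with a hypothetical function name: it only recognizes `#`-style headings and does not handle special cases such as `#` characters inside code blocks.

```python
import re

HEADING = re.compile(r"(#{1,6})\s+(.*)")


def split_markdown_by_heading(markdown: str) -> list[dict[str, str]]:
    """Split a Markdown document into chunks, one per heading."""
    chunks: list[dict[str, str]] = []
    heading = ""
    body: list[str] = []

    def flush() -> None:
        # Store the current chunk unless it has neither a heading nor any text.
        text = "\n".join(body).strip()
        if heading or text:
            chunks.append({"heading": heading, "text": text})

    for line in markdown.splitlines():
        match = HEADING.match(line)
        if match:
            flush()
            heading = match.group(2).strip()
            body = []
        else:
            body.append(line)
    flush()
    return chunks
```

Keeping a table in the same chunk as its heading means that a retrieved chunk carries enough context for the generative model to interpret the values in it.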

Another significant limitation in RAG systems is the token limit, which refers to the maximum number of textual units (tokens) that the model can process in a single request. The retrieved information and the user’s query have to fit within this limit together, so when preparing data for RAG, it is important to optimize the content and eliminate redundancies in order to fully utilize the system’s capabilities.
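A simple way to respect the token limit is to add retrieved passages in order of relevance until the budget is used up. The sketch below assumes a very rough estimate of one token per whitespace-separated word; real tokenizers count differently, so in practice the model's own tokenizer should be used. Both function names are hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Rough estimate: one token per whitespace-separated word (real tokenizers differ)."""
    return len(text.split())


def fit_to_budget(passages: list[str], max_tokens: int) -> list[str]:
    """Keep passages in ranked order until the next one would exceed the budget."""
    selected: list[str] = []
    used = 0
    for passage in passages:
        cost = estimate_tokens(passage)
        if used + cost > max_tokens:
            break
        selected.append(passage)
        used += cost
    return selected
```

Stopping at the first passage that does not fit keeps the most relevant passages and preserves their ranking; removing redundant text from the dataset beforehand lets more distinct passages fit into the same budget.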

Implementation of RAG at SentiOne

SentiOne Automate allows its users to incorporate RAG into their bots in a simple manner. The platform has a built-in vector database engine which processes the given data and, by means of semantic search, finds more precise answers to user queries.

To implement RAG in SentiOne Automate, the first and most important step is to prepare the dataset that is to become the source of information for the bot. As already mentioned, it is crucial to have a well-structured database, as its quality is a significant factor in the bot’s performance. Once the dataset is ready, it can be used freely in the bot through the RAG system.

SentiOne Automate uses advanced generative AI models, allowing users of the platform to customize their bot by defining its tone, style, and overall character, so as to decide whether it should always be polite and professional or rather more casual, keeping the conversations light. Detailed instructions can also be provided to ensure that the bot answers in a particular way and formats its responses to be most accessible for customers. The platform has ready-to-use integration blocks for LLMs, which makes creating RAG bots that much easier and requires little to no programming effort. These LLM blocks allow the user to choose between the latest models from OpenAI, specify the context to be considered for given queries, and make use of other features to ensure the natural flow of the conversation.

SentiOne Automate takes RAG-based bots to the next level. By combining precise search with natural response generation, it enables companies using the platform not only to improve the quality of customer service, but also to automate some of their more complex processes.

Conclusion

RAG is a powerful tool that has the potential to reshape the landscape of Conversational AI through its unique blend of retrieval-based methods and generative models. This combination results in more accurate, contextually aware, and coherent responses. Despite some challenges, such as increased computational costs and the need for well-prepared, extensive datasets, the possible applications of RAG across a variety of industries are vast and transformative. SentiOne Automate is already implementing it in its solutions, and over time, as research and technological advancements continue, RAG is likely to become an integral part of the future of Conversational AI and other data-driven technologies.