How to Successfully Manage Chatbot Security Risks

For years businesses steadily introduced new AI-enabled chatbots as more companies realised the compelling benefits of customer support automation. It was business as usual, no big news. And then ChatGPT launched, bringing with it a bright media spotlight on chatbot capabilities. People marvelled at ChatGPT’s range and quality of answers. Business leaders accelerated their focus on chatbots and AI applications. But unfortunately, it’s a sad part of our reality that innovations attract bad actors. It wasn’t long before hackers and scammers were exploring every method of subverting ChatGPT, in some cases with sufficient success to give business leaders pause on their ambitious chatbot plans.

If you have responsibility for selecting customer support tools for your organisation, you need a firm grasp of the potential, but also an informed view of the security risks. ChatGPT is a wonderful tool, but as we’ve explained, generative AI isn’t built for business use cases, such as customer support. Of course, going for a conversational AI provider instead won’t solve all your issues. You should still look for a provider that can deliver a secure experience, allowing you to receive the business benefits of customer service automation while alleviating risks. In this instalment of the AI Bots – A Manager’s Handbook Series we’re going to help you get a clear view of chatbot security risks and understand the best solutions and practices to address them.

Why Is Chatbot Security Important for Businesses?

A conversational AI chatbot is a great way to improve customer satisfaction and retention, especially in interaction-intensive industries like banking, insurance, healthcare, and utilities. Naturally, the more sensitive your client information, the more risk you face.

While your chatbot can be a tremendously efficient support tool for your customers, it can also be an attractive target for hackers and scammers. Chatbots often have a direct pipeline to customer data through system integrations. Also, in authenticated chat sessions, clients can submit valuable personal information through a chat interface. Ensuring secure transmission of client data through your chatbot is essential to avoid legal and financial risk.

There is a reputational risk as well. Your chatbot is a representative of your company’s brand, and someone (maybe you?) is accountable for every client-facing word it produces. Client service mishaps risk new exposure in our digital age. Most customers won’t record their phone conversation with your customer support staff, but a bad chat experience can be cut and pasted into a social media post with a few clicks.

Examples of Chatbot Security Risks

Here are some of the chatbot vulnerabilities and chatbot threats hackers aim to exploit.

Prompt injections: One chatbot exploit involves prompting the chatbot to role-play while following the user’s directions, for example by prompting ChatGPT to respond from the perspective of a fictional character with no moral restraints. This chatbot injection method enables the user to subvert the usual guidelines and engage the bot in conversations involving racist language, conspiracy theories, or suggestions of illegal activities such as building explosives. A related attack, chatbot SQL injection, is an attempt to trick the chatbot into treating a malicious command as part of the information submitted, causing the chatbot to execute that command.
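To make the SQL injection risk concrete, here is a minimal sketch of how a chatbot backend might pass user-supplied text to a database. It uses Python's built-in `sqlite3` module; the `orders` table and the function names are hypothetical and exist only for illustration. The unsafe version pastes the chat message straight into the query, while the safe version uses a parameterised query so the message is always treated as data.

```python
import sqlite3


def build_demo_db() -> sqlite3.Connection:
    """Create a throwaway in-memory database (hypothetical schema)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, status TEXT)"
    )
    conn.executemany(
        "INSERT INTO orders (customer, status) VALUES (?, ?)",
        [("alice", "shipped"), ("bob", "pending")],
    )
    conn.commit()
    return conn


def lookup_orders_unsafe(conn: sqlite3.Connection, customer: str) -> list:
    # Vulnerable: the chat message is concatenated into the SQL text,
    # so quotes inside it can change the meaning of the query.
    query = f"SELECT customer, status FROM orders WHERE customer = '{customer}'"
    return conn.execute(query).fetchall()


def lookup_orders_safe(conn: sqlite3.Connection, customer: str) -> list:
    # Safer: the "?" placeholder keeps the chat message as a plain value.
    query = "SELECT customer, status FROM orders WHERE customer = ?"
    return conn.execute(query, (customer,)).fetchall()


if __name__ == "__main__":
    db = build_demo_db()
    payload = "x' OR '1'='1"  # what an attacker might type into the chat
    print("unsafe:", lookup_orders_unsafe(db, payload))
    print("safe:  ", lookup_orders_safe(db, payload))
```

With the injected payload, the unsafe lookup returns every customer's orders, while the parameterised lookup returns nothing because no customer has that literal name. Parameterised queries address this one attack class only; they do not protect against role-play style prompt injection.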

Malware distribution: There are several ways hackers are using AI chatbots to help develop and distribute malware. For instance, programmers use AI chatbots to create software to steal information, develop ransomware, and build Dark Web marketplaces for trading stolen information and illicit goods. To compound the risk, threat actors can dupe AI chatbots like ChatGPT into recommending code packages that contain malware to other users, leading to further spread as those developers build new software.

Dataset poisoning: This chatbot threat involves adding hidden prompt injections in the source material that the chatbot learns from, which could include public web pages. This technique introduces the risk not only of hateful or corrupt messages being processed and used by the bot, but also of harmful code that can execute functions. Chatbot security vulnerabilities are particularly dangerous where chatbots integrate with third-party services and APIs. In one chatbot phishing example referenced in MIT Technology Review, a security researcher hid a prompt on a website he created, then visited that site using a browser with an integrated chatbot. With no user action beyond visiting the website, the chatbot located and executed his prompt injection (a fake scam pop-up offer designed to solicit credit card information).

Data leakage and PII breaches: Chatbot users are in some cases inputting highly confidential PII (personally identifiable information). Many chatbot platforms are cloud-based, third-party vendor solutions sitting in between a company’s infrastructure and its clients. Inadequate authentication, security protocols, or data protection measures can lead to the exposure of highly sensitive client information through error, negligence, or deliberate chatbot attacks. This is a risk for all companies, but it is particularly acute for those handling financial data, and for any company bound by the General Data Protection Regulation (GDPR).

Data vigilance is required for messages delivered by your company’s chatbot as well. Sensitive company data or intellectual property can be inadvertently shared with customers by an inadequately trained chatbot.
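One common way to reduce the impact of a leak is to mask obvious PII before chat messages reach logs or analytics. The snippet below is a minimal sketch of that idea using only Python's standard `re` module. The patterns and the `redact_pii` function name are assumptions made for illustration; they are rough heuristics that will miss many formats and are no substitute for a dedicated data protection tool.

```python
import re

# Heuristic patterns (assumptions, deliberately simple):
# an email address, and a run of 13 to 19 digits that may be
# separated by single spaces or hyphens (a typical card number layout).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def redact_pii(message: str) -> str:
    """Replace email addresses and card-like numbers with placeholders."""
    message = EMAIL_RE.sub("[EMAIL]", message)
    message = CARD_RE.sub("[CARD]", message)
    return message


if __name__ == "__main__":
    raw = "My card is 4111 1111 1111 1111 and my email is jo@example.com"
    print(redact_pii(raw))
```

Applying the redaction at the point where messages are written to storage, rather than in the chat interface itself, keeps the conversation usable for the customer while limiting what a breach of the logs could expose.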

Other Risks and Ethical Concerns with AI Chatbots

We’ve highlighted some of the chatbot security risks associated with deliberate foul play from hackers and bad actors. The unique benefits of AI chatbots (as opposed to simpler rule-based chatbots) bring additional ethical issues to consider.

Inaccuracy and misinformation: A generative AI chatbot (unlike a conversational AI one) can give you a completely inaccurate answer with the same confidence with which it tells you that 2+2=4. Chatbots like ChatGPT leverage a neural network of linguistic associations to choose (generate) what they calculate to be the most appropriate sequence of words. The generative AI can surely be creative or, rather, hallucinate. There’s no fact-checker in there. And the majority of their training data comes from social media and the web. Unlike on the web, where people are more sceptical of information (and more able to validate the context and source of the data), too many people assume that chatbots are all-knowing and accept their responses as fact.

As a potential provider of chatbot services for your clients, you need to account for the naivety of your audience and take steps to prevent them from receiving false answers. Fortunately, most conversational AI solutions intended for enterprise-level clients allow you to carefully control the dataset that your chatbot is modelled on. By using an existing, well-trained model complemented by your internal company data, you can rule out the most common sources of error associated with chatbots.

Data governance and client expectations: A cloud-based AI chat solution creates an intermediary between your clients and your business. This brings many careful decisions about how sensitive data is handled and stored. It can be a grey area in terms of your commitment to your client’s data privacy. While it may be a fair arrangement based on your terms of use and client disclosures, are you acting in their best interest?

Unconscious bias: AI bots don’t hold a grudge against anyone, but without careful training against bias and the right dataset they can produce responses that are discriminatory or reflective of stereotypes. The risk is greater than hurting someone’s feelings; it can run afoul of anti-discrimination or “hate-speech” laws. It’s important that bot design teams are trained and equipped to recognise and flag bias as they train their chatbot model. As we’ve written previously, having diverse teams also brings more vigilance for unintended bias.

Supply-chain & labour inequality: Some chatbot providers have been criticised for using unfairly paid overseas workers to conduct training and testing on their products. The due diligence phase of the purchase cycle provides you with an opportunity to inquire about your potential partner’s offshoring arrangements. Similarly, as the field of artificial intelligence is 74% male, you may want to understand your software provider’s efforts toward gender inclusion.

Environmental footprint: According to the International Energy Agency, AI language models and data centres are responsible for 1% of all carbon emissions in the world. Knowing this, you may seek a chatbot provider whose sustainability and carbon reduction goals align well with yours.

How to Ensure Chatbot Security

Some of the security risks and ethical issues we’ve highlighted simply don’t apply once you frame your needs around the conversational AI chatbots developed for customer service rather than generative AI systems like ChatGPT. Nonetheless, opening a new public-facing channel for customer service holds risks in any scenario, and here are some of the best mitigations.

On-premises or private cloud deployment: In highly regulated industries like banking, insurance, and utilities, network architects usually prefer an on-premises chatbot or a private cloud solution because it contains data within the organisation’s infrastructure and allows the greatest level of oversight and control. As the owner of the server powering the AI chatbot, your company has full and exclusive access to chatbot data and conversation logs. Some additional benefits of an on-premises deployment include:

- Improved security and access controls: Your chat data can be protected by the same shield of network security surrounding all of your business-critical applications

- Cost control: Adding chatbot capabilities to existing infrastructure and maintenance routines can be cost-effective and reduce the need for outsourcing

- Performance optimisation: Your network team can choose hardware and infrastructure to provide optimal chatbot performance, likely using existing monitoring tools

- Customisation: When your bot is deployed on-premises or on private cloud, you have full control over custom features and app integrations

Authentication and privacy: AI chatbots provide the best customer experience when they can reach into corporate systems and retrieve the account-level data needed to resolve a client’s issue. As with other client access points such as phone support or your web portal, this requires careful handling of identity-based handshakes for authentication and authorisation. Chatbots and voice bots can use external authentication methods such as biometric scans (iris, facial, or fingerprint) and two-factor authentication via integrations. Additionally, a chatbot can be set up to verify a client ID and other personal data (e.g. date of birth, mother’s maiden name) within the chat. There should be nothing here that your network administrators can’t handle – the trick is aligning it with the policies and practices of other channels to create consistent omnichannel security.
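The in-chat verification described above can be sketched in a few lines. The example below is an illustration under stated assumptions, not a production design: the `CLIENT_RECORDS` lookup, the intent names, the attempt limit, and the `ChatSession` class are all hypothetical, and a real deployment would call your existing identity system and normally combine this check with two-factor authentication. It uses only Python's standard library.

```python
import hmac
from dataclasses import dataclass

# Hypothetical stand-in for a call to your identity system.
CLIENT_RECORDS = {"C-1001": "1990-04-12"}  # client ID -> date of birth

# Hypothetical intents that expose account-level data.
SENSITIVE_INTENTS = {"account_balance", "change_address"}
MAX_ATTEMPTS = 3  # assumed lockout threshold


@dataclass
class ChatSession:
    verified: bool = False
    failed_attempts: int = 0

    def verify(self, client_id: str, date_of_birth: str) -> bool:
        """Check the supplied details; lock the session after repeated failures."""
        if self.failed_attempts >= MAX_ATTEMPTS:
            return False  # locked: hand over to a human agent instead
        expected = CLIENT_RECORDS.get(client_id, "")
        # compare_digest avoids leaking information through timing differences.
        matches = bool(expected) and hmac.compare_digest(
            expected.encode(), date_of_birth.encode()
        )
        if matches:
            self.verified = True
        else:
            self.failed_attempts += 1
        return matches


def handle_intent(session: ChatSession, intent: str) -> str:
    """Refuse account-level requests until the session is verified."""
    if intent in SENSITIVE_INTENTS and not session.verified:
        return "Please verify your identity first."
    return f"Handling '{intent}'."


if __name__ == "__main__":
    session = ChatSession()
    print(handle_intent(session, "account_balance"))
    session.verify("C-1001", "1990-04-12")
    print(handle_intent(session, "account_balance"))
```

Keeping the gate in one function makes it easier to apply the same rule that your phone and web channels already follow, which is the consistency the paragraph above recommends.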

Encryption: End-to-end encryption is ideal for ensuring that only the sender and your authorised employees can see any chat message. However, if you expand to channels on third-party platforms, you may encounter obstacles. For example, chatbots deployed to WhatsApp can relay encrypted messages to your chatbot, but messages sent through a Facebook chatbot cannot be relayed in encrypted form. That information passes through Meta’s servers in a human-readable format. For that reason, it’s important to consider which third-party tools you connect and deploy your chatbot to.

Ensure Chatbot Security with SentiOne

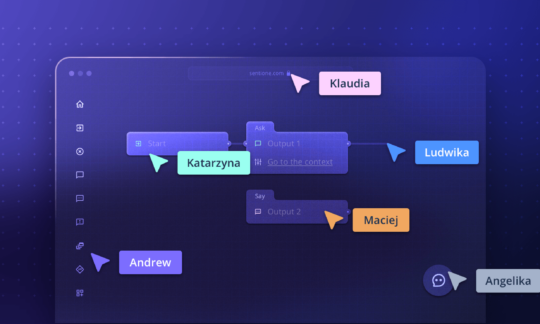

SentiOne Automate is an AI chatbot platform designed for enterprise-level customer support. We have both the experience and the product options to address chatbot security risks. In addition to our cloud-based model, Automate is available as an on-premises solution or in a private cloud. By connecting your internal company datasets to the chatbot training process, we help clients build NLU models to control the narrative and how the bot speaks, the prompts that it will answer, and the accuracy of the bot’s knowledge.

SentiOne Automate is available through a subscription model or software-as-a-service. To explore the potential ROI benefits of customer service automation for your company, try our chatbot ROI calculator or talk to our team.

Article Summary

The article discusses the importance of managing security risks associated with chatbots and voice bots and provides an overview of the vulnerabilities and threats hackers exploit. It highlights risks such as prompt injections, malware distribution, dataset poisoning, and data leakage. The article also addresses ethical concerns like misinformation, data governance, unconscious bias, supply chain inequality, and environmental impact. Finally, it offers solutions for improving chatbot security.