Google’s AI is impressive, but it’s not sentient. Here’s why.

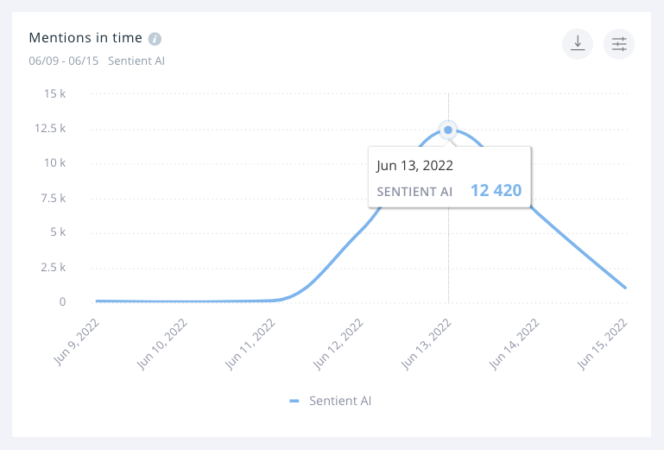

A particular news story has been on everybody’s mind this week: the story of Blake Lemoine, a Google engineer who thinks an AI chatbot he’s been working on has become sentient. After he decided to go public with his discovery, Google suspended him, ostensibly for breaking confidentiality agreements. By that time, however, social media and news organisations had already picked up the story.

The past week has been marked by heated debate among AI researchers and laypeople alike. As with any contentious topic, getting to the bottom of things can be difficult, which is why we’re here. We’re going to untangle this matter as best we can, with the enthusiastic help of our own AI research team!

What AI are we even talking about?

The chatbot in question, LaMDA — which stands for Language Model for Dialogue Applications — is an unreleased chatbot application developed by Google. It’s trained on very large volumes of data: conversations between internet users.

In that manner, it’s very similar to SentiOne chatbots and voicebots. We also use significant amounts of text collected from the internet to teach our bots — it’s one of the best approaches to use if natural-sounding conversations are what you’re after.

In fact, that’s LaMDA’s main application: to provide realistic conversations. When Google unveiled it last year, a large amount of attention was paid to LaMDA’s capabilities.

How would we tell if an AI became sentient?

AI development is an old discipline. The “Turing test” was proposed all the way back in 1950 by the man whom many consider the father of modern computing: Alan Turing.

The test itself is simple: an examiner puts questions to two test subjects, one human and one machine. If the examiner cannot tell which answers come from the machine, the machine is considered to have passed the test.

It’s one of the best-known concepts in AI research, even among laypeople. It is also one of the most misunderstood, thanks in no small part to depictions and discussions of AI in popular culture.

The Turing test cannot, for instance, determine whether a computer is actually thinking. All it measures is the external behaviour of the machine. As far as the test is concerned, actually thinking and merely providing the illusion of thought are the same thing.
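To make that limitation concrete, here is a minimal Python sketch of a single round of the test. Everything in it is hypothetical and invented for illustration (the `play_round` function, the canned responders, the simple `judge`); it is not how any real evaluation is run. The point it tries to show is that the judge only ever receives a transcript of text, never anything about what goes on inside either subject.

```python
import random
from typing import Callable, Dict, List, Tuple

Responder = Callable[[str], str]
Transcript = Dict[str, List[Tuple[str, str]]]


def play_round(questions: List[str], human: Responder, machine: Responder,
               judge: Callable[[Transcript], str], rng: random.Random) -> bool:
    """Return True if the judge fails to pick out the machine (the machine "passes")."""
    # Hide the two subjects behind the anonymous labels "A" and "B".
    responders = [human, machine]
    rng.shuffle(responders)
    hidden = dict(zip(("A", "B"), responders))

    # The transcript is the only thing the judge gets to see: plain text.
    transcript = {label: [(q, respond(q)) for q in questions]
                  for label, respond in hidden.items()}

    guess = judge(transcript)  # the label the judge believes is the machine
    return hidden[guess] is not machine


# Hypothetical subjects: one person and two very simple "bots".
def human(question: str) -> str:
    return "I love spending time with my family."


def mimic_bot(question: str) -> str:
    return "I love spending time with my family."


def clumsy_bot(question: str) -> str:
    return "QUERY NOT UNDERSTOOD"


def judge(transcript: Transcript) -> str:
    # Flag whichever subject sounds robotic; with nothing to go on, guess "A".
    for label, exchanges in transcript.items():
        if any(answer.isupper() for _, answer in exchanges):
            return label
    return "A"


questions = ["What makes you happy?"]
print(play_round(questions, human, clumsy_bot, judge, random.Random(0)))
print(play_round(questions, human, mimic_bot, judge, random.Random(0)))
```

In this toy setup `mimic_bot` is a one-line function that understands nothing, yet the judge can do no better than guess, because its answers are indistinguishable from the human’s on the page.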

This brings us neatly to the primary objection to Lemoine’s claims. Is LaMDA actually sentient, or is it merely doing its job — being very good at simulating real conversations?

What do the experts say?

Plenty of experts point out that merely examining conversations with the AI is not enough to determine whether it’s actually sentient. The general consensus is that no, LaMDA hasn’t developed consciousness. It’s simply a really good chatbot, as explained in this blog post by Gary Marcus, founder of Robust.AI.

Nonsense on Stilts.

A hot take on all this “OMG. LaMDA is Sentient” mania—and why the AI community is pretty united on this one.https://t.co/4IzUh35y9n

— Gary Marcus 🇺🇦 (@GaryMarcus) June 12, 2022

Other prominent figures in the AI community have also chimed in, including Mozilla senior fellow Abeba Birhane, who pointed to the trend of companies boasting about their “humanlike” models and algorithms.

we have entered a new era of "this neural net is conscious" and this time it's going to drain so much energy to refute

— Abeba Birhane @ #FAccT22 Seoul (@Abebab) June 12, 2022

I asked two members of our AI research team for their opinion. Agnieszka Pluwak and Jakub Klimek are responsible for our cutting-edge NLP algorithms and models. Here’s what they had to say:

Dr Agnieszka Pluwak, linguist, NLU Engine Product Owner:

LaMDA only provides the illusion of talking to a real person. It achieves this by avoiding logical, semantic and grammatical errors (which would make you suspect it’s a machine) as well as by describing experiences that most people find relatable: spending time together with your family, for instance.

It does this because the system was likely trained on a data set composed of many statements by real people, as well as specially written dialogue examples. It’s nothing more than mimicry! Lemoine stated that LaMDA is sentient because it writes about feelings like people would. However, the ability to write about feelings is completely different from actually experiencing them.

Jakub Klimek, Head of R&D:

No matter how advanced they are, chatbots are still just language models. They’re trained on extremely large datasets composed of statements made by actual people — which helps them “imitate” people, but imitating statements is all they can do. They can’t, for instance, come up with new words and definitions: they lack creativity.

Obviously, true consciousness is much more than just creativity, to say nothing of sentience. However, that’s a matter for psychologists and neuroscientists.

It’s important to understand that no matter how advanced LaMDA is, it’s still just a language model. It only returns variations on its training dataset.
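The idea of “variations on the training dataset” can be illustrated with a deliberately tiny toy: a bigram model in Python. This is a drastic simplification and an assumption made purely for illustration. LaMDA is a large neural network, not a word-pair lookup table, and the function names and three-sentence corpus below are invented. The sketch only shows the general principle of learning which words follow which in example text, then recombining them.

```python
import random
from collections import defaultdict
from typing import Dict, List

START, END = "<s>", "</s>"


def train_bigrams(corpus: List[str]) -> Dict[str, List[str]]:
    """Record, for every word, the words that followed it in the corpus."""
    model: Dict[str, List[str]] = defaultdict(list)
    for sentence in corpus:
        words = [START] + sentence.split() + [END]
        for current, following in zip(words, words[1:]):
            model[current].append(following)
    return model


def generate(model: Dict[str, List[str]], rng: random.Random,
             max_words: int = 20) -> str:
    """Build a sentence by repeatedly sampling a word seen after the previous one."""
    word, output = START, []
    for _ in range(max_words):
        word = rng.choice(model[word])
        if word == END:
            break
        output.append(word)
    return " ".join(output)


# Invented example corpus standing in for "statements made by actual people".
corpus = [
    "I feel happy when I spend time with my family",
    "I feel sad when I am alone",
    "my family makes me happy",
]

model = train_bigrams(corpus)
print(generate(model, random.Random(1)))
```

The output can be a sentence that appears nowhere in the corpus, and it may even sound like a statement about feelings, but every word and every word-to-word transition was copied from the examples.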

The question of LaMDA’s sentience might be settled, but that doesn’t mean the discussion is over. The discourse coincided with Google announcing a suite of over 200 tasks designed to measure computer intelligence, to which future contenders for the “first sentient AI” title will be subjected.

As for right now, well — the consensus is that we’re still a long way away from HAL 9000. That doesn’t mean our current AI solutions aren’t useful. Far from it! Our own chatbots and voicebots can help you optimise your customer service through the magic of automation. Interested? Get in touch with us and book your demo today!