Everything you wanted to know about bots in customer service

First impulse – why we build bots for customer service

At SentiOne, apart from our online listening and customer engagement tool, we work on improving human communication with machines, however bold that may sound.

Several months ago, we took on the challenge of building a platform supporting online customer service for one of the biggest financial companies on the Polish market. We decided to focus on automating sales processes and optimising customer service in areas such as debt collection, helpdesk and other easily automated processes. What we have accomplished, and are very proud of, is a notable reduction in service time: thanks to the artificial intelligence system, our client can assist each customer much faster and thus handle even more requests.

Drawing on this implementation, we decided to share the knowledge we have gathered over the past months.

How our speech recognition system works

To get back to the customer with a valuable answer from the bot, one thing had to be perfected: we needed to thoroughly understand the intention behind the question. That’s why the speech recognition module we use is based on deep learning and represents the state of the art among commercially available solutions. It is a Large-Vocabulary Continuous Speech Recognition (LVCSR) system. Sounds difficult? In fact, it’s simple: it recognises natural language (the way we naturally speak) rather than just specific formulas, as first-generation bots do.

Speech synthesis

The bots we’re developing are not just the simplest ones that answer you in a messaging app with predefined phrases. Thanks to speech synthesis, you can talk to the robot, for example… on the phone. The text-to-speech technology we use for the Polish market is based on a solution from the Polish company Ivona Software, which was bought by Amazon and now uses the same technology in Amazon Alexa. At the moment, it is one of the few solutions offering advanced speech synthesis in Polish, allowing for a smooth conversation based on the dynamic dialogue scenarios we design.

Intelligent conversation

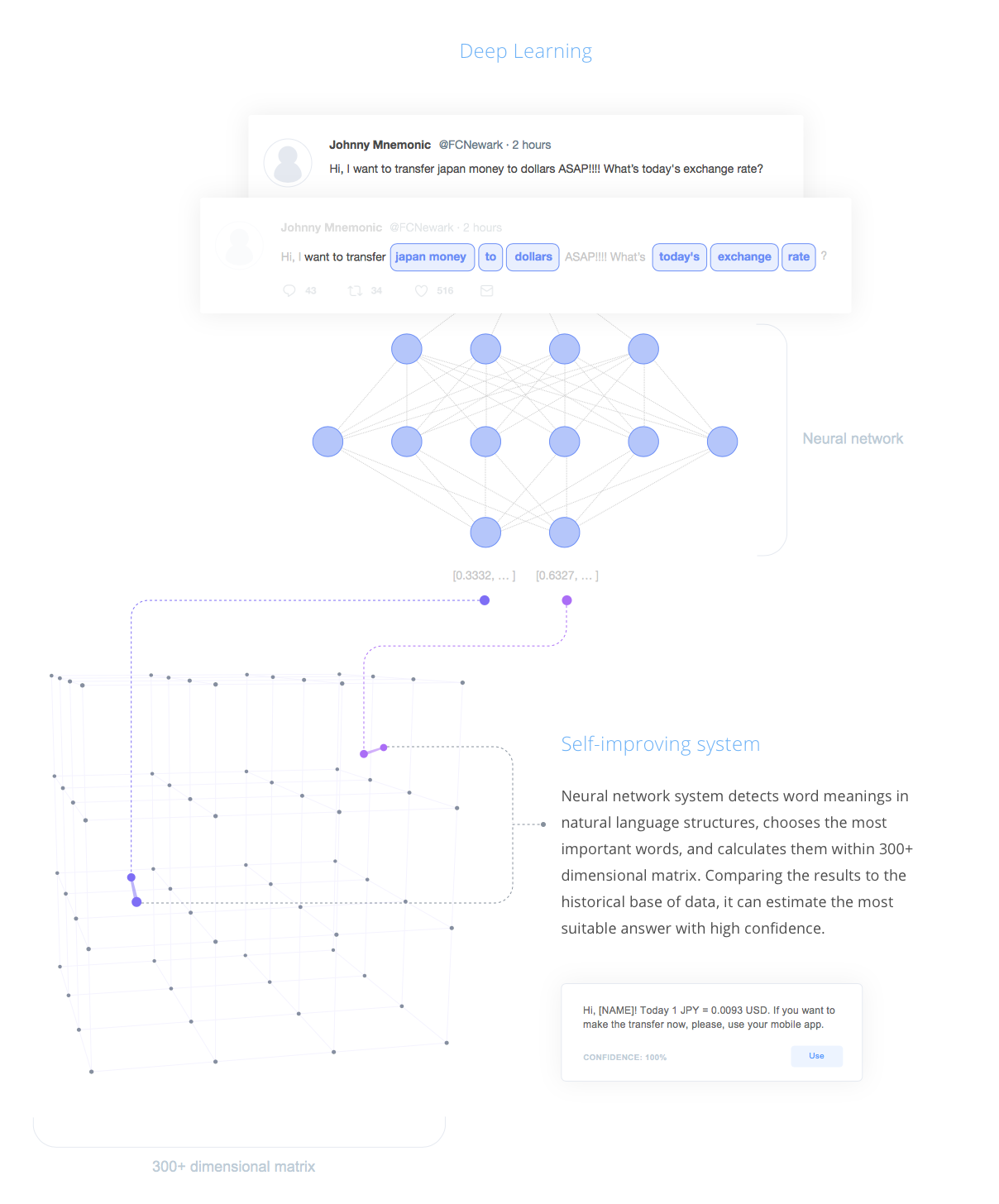

The SentiOne React AI platform contains an extensive natural language understanding and processing (NLU/NLP) engine. Based on machine learning over text corpora and language structures, it recognises the interlocutor’s intentions.

To put it simply, our artificial intelligence system is trained on billions of public online statements collected in the main area of our business: internet monitoring. This gives our neural network plenty of material for learning to interpret free-form human speech.

Thanks to this combination, we can not only ensure that the system is self-taught but also, for an even deeper understanding, narrow the bot’s competence to a specialised area. For customer service in banking, for example, out of more than 23 billion statements in our database we extracted 7 million related to banking, and our bot learned to interpret the most common customer statements and to respond naturally in the chosen style.
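The article does not say how the 7 million banking statements were selected. A minimal sketch of the general idea, assuming a simple keyword filter with a hypothetical `BANKING_KEYWORDS` list (the functions `is_domain_statement` and `narrow_corpus` are illustrative names, not SentiOne’s code), could look like this:

```python
import re

# Hypothetical seed vocabulary for the banking domain; the real selection
# criteria used to build the banking subset are not described in the article.
BANKING_KEYWORDS = {"account", "transfer", "loan", "card", "balance", "bank"}


def tokenize(text: str) -> list:
    """Lowercase the text and split it into alphabetic tokens (Polish letters included)."""
    return re.findall(r"[a-ząćęłńóśźż]+", text.lower())


def is_domain_statement(text: str, keywords=BANKING_KEYWORDS, min_hits: int = 1) -> bool:
    """Keep a statement if it mentions at least `min_hits` domain keywords."""
    hits = sum(1 for token in tokenize(text) if token in keywords)
    return hits >= min_hits


def narrow_corpus(statements, keywords=BANKING_KEYWORDS, min_hits: int = 1):
    """Yield only the statements that look relevant to the chosen domain."""
    for statement in statements:
        if is_domain_statement(statement, keywords, min_hits):
            yield statement


corpus = [
    "How do I check my account balance?",
    "Great match last night!",
    "My card was blocked after the transfer.",
]
print(list(narrow_corpus(corpus)))
```

A production pipeline would more likely score statements by semantic similarity to the domain than by exact keywords, but the filtering step has the same shape.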

Here is an example:

As you can see in the film, the bot not only executes the command without error but also keeps track of the main thread of the conversation. When the user answers its question with a question, the bot is not confused. It still recognises the intent (a question about account balances, asked in order to decide which account to use for the transfer). It answers while remembering the main conversation path and proposes returning to the topic under discussion.
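The article does not describe how the bot keeps the main thread during such a digression. One common approach is a stack of open tasks; the sketch below assumes that design, and `Task`, `DialogueStack` and the balance figures are all hypothetical:

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Task:
    """An open dialogue goal, e.g. a transfer still waiting for a source account."""
    name: str
    pending_question: str


@dataclass
class DialogueStack:
    tasks: List[Task] = field(default_factory=list)

    def push(self, task: Task) -> None:
        self.tasks.append(task)

    def complete(self) -> Optional[Task]:
        """Close the current task once its goal has been reached."""
        return self.tasks.pop() if self.tasks else None

    def current(self) -> Optional[Task]:
        return self.tasks[-1] if self.tasks else None

    def answer_digression(self, answer: str) -> str:
        """Answer an off-path question without dropping the open task."""
        task = self.current()
        if task is None:
            return answer
        return f"{answer} Shall we go back to the {task.name}? {task.pending_question}"


stack = DialogueStack()
stack.push(Task("transfer", "Which account should the money come from?"))
# The user replies with a question about balances; the amounts are invented.
balances = {"savings": 1200, "current": 350}
summary = ", ".join(f"{name}: {amount} PLN" for name, amount in balances.items())
reply = stack.answer_digression(f"Your balances are {summary}.")
print(reply)
```

Because the transfer task stays on the stack while the balance question is answered, the bot can steer back to it; `complete()` is called only once the transfer itself is done.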

How natural language understanding works

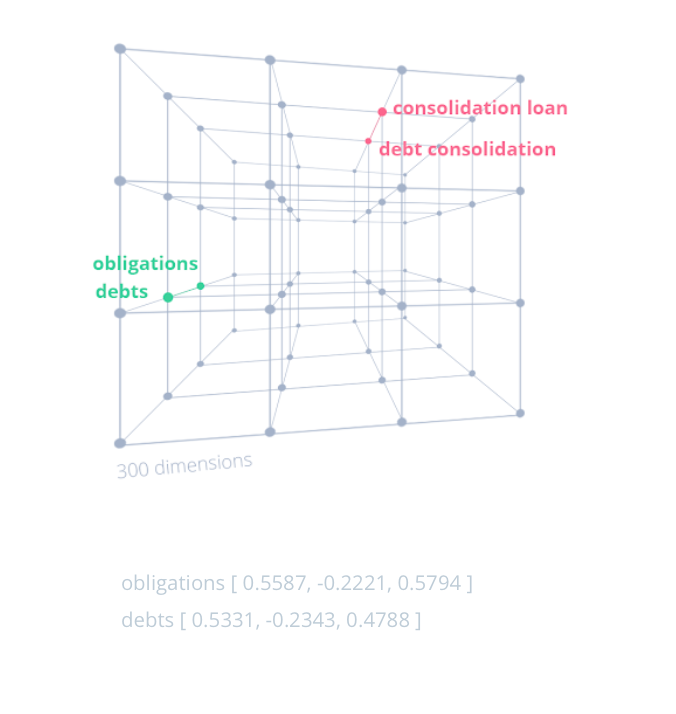

The result of the free speech interpretation model is a 300-dimensional semantic matrix that places every word in this space according to its semantic similarity to other words; the number of dimensions can be adjusted depending on the context. Thanks to the huge amount of input data, the model can also be generated without lemmatization, i.e. words are imported in their original form without being reduced to a base form. This saves a remarkable amount of time, especially for Polish with all its inflected forms (did you know that Polish verbs have around 12 conjugation paradigms, and linguists are not sure exactly how many there are?). The model can be refreshed to include new words.
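To illustrate why skipping lemmatization can work, here is a minimal sketch with invented 4-dimensional vectors standing in for the 300-dimensional model. It assumes cosine similarity as the similarity measure (the article does not name one); with enough data, inflected forms of the same Polish noun end up close together on their own:

```python
import math

# Toy 4-dimensional vectors standing in for the 300-dimensional model.
# The numbers are invented for illustration; a real model learns them from data.
EMBEDDINGS = {
    "konto": [0.90, 0.10, 0.00, 0.20],   # "account" (nominative)
    "konta": [0.88, 0.12, 0.05, 0.18],   # "account" (genitive), kept unlemmatized
    "kontem": [0.85, 0.15, 0.02, 0.25],  # "account" (instrumental)
    "pogoda": [0.05, 0.10, 0.95, 0.00],  # "weather", semantically unrelated
}


def cosine(u, v):
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def most_similar(word, k=2):
    """Return the k words whose vectors are closest to `word`."""
    target = EMBEDDINGS[word]
    scored = [(other, cosine(target, vec)) for other, vec in EMBEDDINGS.items() if other != word]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)[:k]


print(most_similar("konto"))
```

The two other forms of "konto" come out on top, while "pogoda" scores far lower, so the model can treat them as related without an explicit base-form lookup.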

How the intent recognition works

Building on these word representations, a semantic model of sentences is created in an analogous 300-dimensional space, which makes it possible to recognise that two sentences express similar intentions even when they use seemingly different words.
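The article does not say how sentence vectors are derived from word vectors. A simple and widely used baseline is to average them; the sketch below assumes that approach, with invented 3-dimensional vectors and English stand-in words:

```python
import math
import re
from typing import List, Optional

# Invented 3-D word vectors standing in for the 300-D model.
WORD_VECTORS = {
    "check": [0.6, 0.2, 0.0],
    "my": [0.1, 0.1, 0.1],
    "balance": [0.9, 0.1, 0.0],
    "how": [0.1, 0.1, 0.1],
    "much": [0.3, 0.1, 0.0],
    "money": [0.8, 0.2, 0.0],
    "block": [0.1, 0.9, 0.1],
    "card": [0.2, 0.8, 0.1],
}


def sentence_vector(sentence: str) -> Optional[List[float]]:
    """Average the vectors of known words; unknown words are skipped."""
    words = re.findall(r"\w+", sentence.lower())
    vectors = [WORD_VECTORS[w] for w in words if w in WORD_VECTORS]
    if not vectors:
        return None
    return [sum(values) / len(vectors) for values in zip(*vectors)]


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


query = sentence_vector("Check my balance")
same_intent = sentence_vector("How much money do I have?")
other_intent = sentence_vector("Block my card")
print(cosine(query, same_intent), cosine(query, other_intent))
```

"Check my balance" and "How much money do I have?" share no words, yet their similarity is close to 1, while "Block my card" scores much lower even though it shares the word "my".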

Depending on how the model is adapted to the client’s needs, language structures can be used to make the solution more effective: the system can remove frequently repeated words of low significance, reduce words to their base form, correct typos, etc.
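The optional steps listed above can be chained as a small preprocessing function. This is a minimal sketch: `STOPWORDS`, `LEMMAS` and `VOCABULARY` are hypothetical English stand-ins for full Polish lexicons, and typo correction here uses the standard library’s `difflib.get_close_matches`, which is not necessarily what the platform uses:

```python
import difflib
import re

# Hypothetical resources; a production system would use full Polish lexicons.
STOPWORDS = {"i", "the", "a", "to", "my", "please"}
LEMMAS = {"transfers": "transfer", "transferred": "transfer", "accounts": "account"}
VOCABULARY = {"transfer", "account", "balance", "card", "block", "money", "send"}


def correct_typo(token, vocabulary=VOCABULARY, cutoff=0.8):
    """Replace an unknown token with the closest vocabulary word, if one is close enough."""
    if token in vocabulary:
        return token
    matches = difflib.get_close_matches(token, sorted(vocabulary), n=1, cutoff=cutoff)
    return matches[0] if matches else token


def preprocess(text, use_stopwords=True, use_lemmas=True, use_spelling=True):
    """Apply each optional cleaning step, so they can be enabled per client."""
    tokens = re.findall(r"\w+", text.lower())
    if use_stopwords:
        tokens = [t for t in tokens if t not in STOPWORDS]  # drop low-significance words
    if use_lemmas:
        tokens = [LEMMAS.get(t, t) for t in tokens]  # reduce to base form where known
    if use_spelling:
        tokens = [correct_typo(t) for t in tokens]  # fix typos against the vocabulary
    return tokens


print(preprocess("Please send the money to my acount"))
```

Keeping each step behind a flag mirrors the idea that the structures used depend on how the model is adapted to a given client.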

Learning model

The recognition module is trained on textual data of statements. These can be written manually when no data is available, or taken from earlier transcripts of recordings and from chat messages. The recognition module automatically segments the conversations from the training set into predefined clusters.

Each group contains semantically similar statements, i.e. statements that express the same intention. Next comes manual verification: an analyst can merge smaller groups of statements expressing the same intention into larger ones. The analyst should also name all groups and verify the intention assigned to every statement in the training set. On this basis, reference points are created in the 300-dimensional matrix.
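The article does not name the clustering algorithm or say how reference points are computed. The sketch below assumes a simple greedy "leader" clustering by cosine similarity and takes each intent’s reference point to be the centroid (mean vector) of its statements; the 2-dimensional vectors and intent names are invented:

```python
import math
from typing import Dict, List

Vector = List[float]


def cosine(u: Vector, v: Vector) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def leader_clustering(vectors: List[Vector], threshold: float = 0.95) -> List[List[int]]:
    """Greedy grouping: a statement joins the first group whose first member is similar enough."""
    groups: List[List[int]] = []
    for i, vec in enumerate(vectors):
        for group in groups:
            if cosine(vectors[group[0]], vec) >= threshold:
                group.append(i)
                break
        else:  # no sufficiently similar group: start a new one
            groups.append([i])
    return groups


def centroid(vectors: List[Vector]) -> Vector:
    return [sum(values) / len(vectors) for values in zip(*vectors)]


def build_reference_points(vectors, labelled_groups: Dict[str, List[int]]) -> Dict[str, Vector]:
    """Turn analyst-named groups of statement indices into one reference point per intent."""
    return {intent: centroid([vectors[i] for i in idx]) for intent, idx in labelled_groups.items()}


# Invented 2-D vectors standing in for 300-D statement embeddings.
statements = ["What's my balance?", "How much money do I have?", "Show my account balance",
              "Block my card", "My card was stolen", "Please freeze my card"]
vectors = [[1.0, 0.1], [0.9, 0.2], [0.95, 0.05], [0.1, 1.0], [0.2, 0.9], [0.5, 0.8]]

groups = leader_clustering(vectors)
print(groups)  # [[0, 1, 2], [3, 4], [5]]

# Analyst step: merge the two card-related groups and name every group.
labelled = {"check_balance": groups[0], "block_card": groups[1] + groups[2]}
for intent, idx in labelled.items():
    print(intent, [statements[i] for i in idx])
reference_points = build_reference_points(vectors, labelled)
```

The automatic step leaves "Please freeze my card" in its own group; the analyst’s merge is what puts it under the same intent as "Block my card".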

When a new query arrives, it is converted into a vector in the same 300-dimensional space, and the nearest semantic reference point is found. The intent of that reference point becomes the intent of the analysed query, and the distance to it serves as the confidence level of the semantic match. This makes it possible to detect that a statement is unknown when routing calls by voice in an IVR system or, in a dialogue, that the user’s response does not fit the scenario, so the conversation can be redirected to a consultant.
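A minimal sketch of this nearest-reference-point lookup, assuming cosine similarity as the closeness measure (so higher means a better match, the reverse of a distance) and a hypothetical `min_confidence` threshold of 0.9 below which the query is treated as unknown:

```python
import math

# Reference points (intent -> vector), e.g. produced by the training step.
# The 2-D values are invented stand-ins for 300-D vectors.
REFERENCE_POINTS = {
    "check_balance": [0.95, 0.12],
    "block_card": [0.27, 0.90],
}


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def recognise_intent(query_vector, reference_points=REFERENCE_POINTS, min_confidence=0.9):
    """Return (intent, confidence); intent is None when no reference point is close enough."""
    best_intent, best_score = None, -1.0
    for intent, point in reference_points.items():
        score = cosine(query_vector, point)
        if score > best_score:
            best_intent, best_score = intent, score
    if best_score < min_confidence:
        return None, best_score
    return best_intent, best_score


def route(query_vector):
    """Unknown statements go to a human consultant instead of a scenario."""
    intent, _confidence = recognise_intent(query_vector)
    return intent if intent is not None else "transfer_to_consultant"


print(route([0.9, 0.15]))  # close to check_balance
print(route([0.7, 0.7]))   # between both intents, not confident enough
```

The threshold trades automation against safety: raising it sends more ambiguous queries to consultants.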

Voice call

The interaction starts with a message from the system. The user can respond by voice, by text message or by filling in a form. In the first case, the audio is sent to the automatic speech recognition (ASR) module, where it is transcribed and then follows the same common path into the matrix described above as typed input. All of this, of course, happens in real time.
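The point that every channel ends up on one common path can be sketched as a normalisation step in front of the NLU module. This is an illustration under stated assumptions: the input classes are hypothetical, `fake_asr` is a stand-in for a real ASR engine, the way form fields are turned into text is invented, and real-time streaming is not modelled:

```python
from dataclasses import dataclass
from typing import Callable, Union


@dataclass
class VoiceInput:
    audio: bytes


@dataclass
class TextInput:
    text: str


@dataclass
class FormInput:
    fields: dict


UserInput = Union[VoiceInput, TextInput, FormInput]


def to_text(user_input: UserInput, transcribe: Callable[[bytes], str]) -> str:
    """Bring every input channel onto the same textual path before NLU."""
    if isinstance(user_input, VoiceInput):
        return transcribe(user_input.audio)  # ASR step
    if isinstance(user_input, TextInput):
        return user_input.text
    if isinstance(user_input, FormInput):
        # Hypothetical convention: flatten form fields into a short text.
        return " ".join(f"{key} {value}" for key, value in user_input.fields.items())
    raise TypeError(f"Unsupported input: {type(user_input).__name__}")


def fake_asr(audio: bytes) -> str:
    """Stand-in for a real ASR engine; here it just decodes UTF-8 bytes."""
    return audio.decode("utf-8")


for item in [VoiceInput(b"what is my balance"), TextInput("what is my balance"),
             FormInput({"intent": "balance"})]:
    print(to_text(item, fake_asr))
```

After this step, voice and chat input are indistinguishable to the analysis that follows, which is why the same model can serve both.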

At this point, a multi-level analysis of the statement takes place: neural networks perform multi-stage information extraction and build a multi-dimensional vector representation of all its aspects, such as the intention, information, attitude or style of the user’s statement. This works just as it does for text messages.

The interpretation of the statement is forwarded to the Dialogue Manager, which selects the optimal conversation path based on the history of interactions with the user and additional information from the bot. Authorisation can be handled in various ways, depending on the platform.
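The article does not specify the Dialogue Manager’s rules. The sketch below only illustrates the idea of choosing the next step from the current interpretation, the interaction history and extra state such as authorisation; every rule, intent name and return value in it is hypothetical:

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Interpretation:
    """Output of the NLU stage handed to the dialogue manager."""
    intent: Optional[str]  # None when the statement was not recognised


@dataclass
class DialogueManager:
    history: List[str] = field(default_factory=list)
    # Example of additional info from the bot: has the user been authorised yet?
    authorised: bool = False
    protected_intents: frozenset = frozenset({"check_balance", "make_transfer"})

    def next_step(self, interp: Interpretation) -> str:
        if interp.intent is None:
            return "handover_to_consultant"
        self.history.append(interp.intent)
        if self.history.count(interp.intent) > 2:
            # The same request keeps coming back, so the scenario is not helping.
            return "handover_to_consultant"
        if interp.intent in self.protected_intents and not self.authorised:
            return "ask_for_authorisation"
        return f"run_scenario:{interp.intent}"


dm = DialogueManager()
steps = [dm.next_step(Interpretation("check_balance"))]
dm.authorised = True  # e.g. after the platform-specific authorisation succeeds
steps.append(dm.next_step(Interpretation("check_balance")))
steps.append(dm.next_step(Interpretation("check_balance")))
steps.append(dm.next_step(Interpretation(None)))
print(steps)
```

Keeping the history inside the manager is what lets the same intent lead to different paths at different points in the conversation.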

As a wise man said – the future is now

Automation is reaching every sphere of our lives, especially business. The digital revolution is happening right before our eyes, whether we believe it or not. Robots are already streamlining the work of first-line contact centres (with the best results in repetitive, routine processes): they optimise the workload of customer service teams, support daily duties, speed up issue resolution and solve simple problems on their own.

Since at SentiOne we have extensive experience in internet monitoring, we are glad to add innovative services to our offer, hoping they will open up fantastic opportunities for our clients by providing a universal, integrated, omnichannel customer service solution.